In this tutorial series, we'll learn how to build a small app with some big concepts. We'll cover reactive programming with Reactive Extensions (Rx*), JS framework component interaction in Angular, and speech recognition with the Web Speech API. The completed Madlibs app code can be found at this GitHub repo.

In this part, we will cover the following:

- Integrating the Annyang library for speech recognition support

- Implementing a form component allowing users to speak or type words to use in the madlib story

Let's get started!

Web Speech Service

The first thing we'll do is create a service in our Angular app that we can use to interact with the Web Speech API via the Annyang library. Once we have a service that interfaces with speech recognition, we can build a component that listens to the user's voice and stores the words they speak for use in the app.

Generate a Service

Create a new service using the Angular CLI from the root of the `madlibs` project folder:

madlibs$ ng g service speech

We can use `ng g` as a shortcut for `ng generate`.

Note: For brevity, this tutorial will not cover testing. The CLI automatically generates `.spec.ts` files for testing. You can add your own tests, or choose not to generate these files by adding the `--no-spec` flag to `ng g` commands. To learn more about testing in Angular, check out the following articles on testing components and testing services.

Provide Speech Service in App Module

The service will be generated but you'll see a warning in the command line stating that it must be provided to be used. Let's do that now.

Open the `app.module.ts` file and add the following:

```typescript
// src/app/app.module.ts
...
import { SpeechService } from './speech.service';

@NgModule({
  ...
  providers: [
    SpeechService
  ],
  ...
```

We'll import our `SpeechService` and add it to the `providers` array in the `@NgModule`.

Speech Service Functionality

Before we start coding, let's plan what we want our Speech service to do. The speech recognition feature in our app should work like this:

- After granting microphone access in the browser, the user can click a button that will allow the app to start listening to what they will say.

- The user says what part of speech they want to enter followed by a word, such as "verb running", "noun cat", "adjective red", etc.

- The words are filled into editable form fields so the user can modify them if desired.

- If speech recognition did not understand what the user said, the user should be shown a message asking them to try again.

- The user can click a button to tell the browser to stop listening.

On the technical side, our Speech service needs to do the following:

- Interface Angular with the Annyang library.

- Check whether Web Speech is supported in the user's browser.

- Initialize Annyang and set up the different speech commands we'll be listening for (e.g., `verb [word]`, etc.).

- Create and update a stream of the words as Web Speech recognizes the user's spoken commands.

- Handle errors with another stream, including if the user does not grant microphone permissions or if the Web Speech API does not recognize what the user said.

- Provide functions to start and stop listening with the user's microphone.

Speech Service Class

Now let's write the code. Open the `speech.service.ts` file and add the following code:

```typescript
// src/app/speech.service.ts
import { Injectable, NgZone } from '@angular/core';
import { Subject } from 'rxjs/Subject';

// TypeScript declaration for annyang
declare var annyang: any;

@Injectable()
export class SpeechService {
  words$ = new Subject<{[key: string]: string}>();
  errors$ = new Subject<{[key: string]: any}>();
  listening = false;

  constructor(private zone: NgZone) {}

  get speechSupported(): boolean {
    return !!annyang;
  }

  init() {
    const commands = {
      'noun :noun': (noun) => {
        this.zone.run(() => {
          this.words$.next({type: 'noun', word: noun});
        });
      },
      'verb :verb': (verb) => {
        this.zone.run(() => {
          this.words$.next({type: 'verb', word: verb});
        });
      },
      'adjective :adj': (adj) => {
        this.zone.run(() => {
          this.words$.next({type: 'adj', word: adj});
        });
      }
    };
    annyang.addCommands(commands);

    // Log anything the user says and what speech recognition thinks it might be
    // annyang.addCallback('result', (userSaid) => {
    //   console.log('User may have said:', userSaid);
    // });
    annyang.addCallback('errorNetwork', (err) => {
      this._handleError('network', 'A network error occurred.', err);
    });
    annyang.addCallback('errorPermissionBlocked', (err) => {
      this._handleError('blocked', 'Browser blocked microphone permissions.', err);
    });
    annyang.addCallback('errorPermissionDenied', (err) => {
      this._handleError('denied', 'User denied microphone permissions.', err);
    });
    annyang.addCallback('resultNoMatch', (userSaid) => {
      this._handleError(
        'no match',
        'Spoken command not recognized. Say "noun [word]", "verb [word]", OR "adjective [word]".',
        { results: userSaid });
    });
  }

  private _handleError(error, msg, errObj) {
    this.zone.run(() => {
      this.errors$.next({
        error: error,
        message: msg,
        obj: errObj
      });
    });
  }

  startListening() {
    annyang.start();
    this.listening = true;
  }

  abort() {
    annyang.abort();
    this.listening = false;
  }
}
```

In addition to `Injectable`, we'll import `NgZone` from `@angular/core` and `Subject` from RxJS. We'll talk about both of these in more detail shortly.

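We'll `declare` the `annyang` variable with a type of `any`. We access `annyang` as a global variable rather than importing it, so this declaration tells TypeScript that the variable exists.

In our `SpeechService` class, we want to create `words$` and `errors$` streams that components can subscribe to.

Note: Using a `$` at the end of a variable is a popular notation to indicate an observable.

```typescript
words$ = new Subject<{[key: string]: string}>();
errors$ = new Subject<{[key: string]: any}>();
```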

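To do this, we'll use subjects. Rx Subjects act as both observer and observable. As observables, subjects can be subscribed to. As observers, subjects also have `next()`, `error()`, and `complete()` methods, which push notifications to their subscribers. We'll use these methods to feed values into the `words$` and `errors$` streams.

Each `new Subject<T>()` declares the type of the values the subject emits. `words$` emits objects with `string` keys and `string` values. `errors$` emits objects with `string` keys and values of type `any`.

We'll create a `listening` property that is initially `false`. In the `constructor()`, we'll inject `NgZone` as `zone`.

We can then create a `speechSupported` accessor. It returns `true` if `annyang` is truthy, which means the library is available in the user's browser.

Next we'll create an `init()` method:

```typescript
init() {
  const commands = {
    'noun :noun': (noun) => {
      this.zone.run(() => {
        this.words$.next({type: 'noun', word: noun});
      });
    },
    'verb :verb': (verb) => {
      this.zone.run(() => {
        this.words$.next({type: 'verb', word: verb});
      });
    },
    'adjective :adj': (adj) => {
      this.zone.run(() => {
        this.words$.next({type: 'adj', word: adj});
      });
    }
  };
  annyang.addCommands(commands);
  ...
```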

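These are the voice commands that we'll listen for. When we want to set up speech recognition in a component, we can call the Speech service's `init()` method. This method registers the commands with Annyang's `addCommands()` method.

To create commands, we'll build a `commands` object. Each key is a phrase to listen for, such as `noun :noun`. Each value is the function to execute when that phrase is recognized. We'll listen for the user to say the word "noun", followed by a named variable (e.g., `:noun`). A named variable captures a single spoken word, and Annyang passes that word to the function as an argument.

Note: We aren't specifically verifying that the spoken `:noun` is truly a noun. That is beyond the scope of our app and would require dictionary lookups. However, I encourage you to investigate expanding the Madlibs app's functionality on your own with dictionary APIs such as Oxford, Merriam-Webster, Words API, and more.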
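To make the `noun :noun` syntax more concrete, here is a simplified sketch that turns a command phrase with a named variable into a regular expression and matches it against a transcript. This is not Annyang's source code. `commandToRegExp()` and `matchCommand()` are hypothetical helpers, and they only handle literal words and `:named` variables. Annyang itself also supports optional words and splats.

```typescript
// Hypothetical, simplified matcher for Annyang-style command phrases.
// Supports literal words and ":named" variables only (one word each).

type CommandHandler = (...args: string[]) => void;

// Escape regex metacharacters so literal words match literally.
function escapeRegExp(text: string): string {
  return text.replace(/[-[\]{}()*+?.,\\^$|#]/g, '\\$&');
}

// 'noun :noun' becomes /^noun ([^\s]+)$/i
function commandToRegExp(phrase: string): RegExp {
  const pattern = escapeRegExp(phrase).replace(/:\w+/g, '([^\\s]+)');
  return new RegExp('^' + pattern + '$', 'i');
}

// Run the first command whose pattern matches; return false if none do.
function matchCommand(commands: Record<string, CommandHandler>, spoken: string): boolean {
  for (const phrase of Object.keys(commands)) {
    const match = commandToRegExp(phrase).exec(spoken.trim());
    if (match) {
      // Captured groups (the named variables) become handler arguments.
      commands[phrase](...match.slice(1));
      return true;
    }
  }
  return false; // the situation Annyang reports through 'resultNoMatch'
}

// Usage
const heard: string[] = [];
const commands: Record<string, CommandHandler> = {
  'noun :noun': (noun) => { heard.push('noun:' + noun); },
  'verb :verb': (verb) => { heard.push('verb:' + verb); },
  'adjective :adj': (adj) => { heard.push('adj:' + adj); }
};

matchCommand(commands, 'noun cat');     // true
matchCommand(commands, 'Verb running'); // true (case-insensitive)
matchCommand(commands, 'noun big cat'); // false: :noun captures one word
// heard is now ['noun:cat', 'verb:running']
```

`[^\s]+` stops at whitespace, so a named variable captures exactly one word. That is why "noun big cat" falls through to the no-match case in this sketch.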

Angular Zones

When this command is recognized, we'll use an Angular zone method called `run()` to emit the word to the `words$` subject.

Angular uses zones to detect when asynchronous work, such as an event handler or a timer, has finished. This tells the framework when it may need to update the UI. We can also use zones to deliberately run code outside the Angular framework. This can improve performance when asynchronous tasks don't require UI updates or error handling in Angular. You can read more about how NgZone works in its documentation.

In our case, the Annyang library and Web Speech API naturally execute functions outside the Angular zone. However, we need to make sure Angular knows about the outcomes of these functions. NgZone's `run()` method lets us reenter the Angular zone, execute these functions synchronously, and return their values.
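The `next()` calls inside `zone.run()` rely on a subject being both an observer and an observable. The toy class below shows just those two roles. `ToySubject` is hypothetical: it is not RxJS's implementation, it omits `error()` and `complete()`, and it involves no zones. Listeners subscribe on one side, and `next()` pushes each value to all of them.

```typescript
// Toy stand-in for an RxJS Subject (hypothetical, not the RxJS source).
type Listener<T> = (value: T) => void;

class ToySubject<T> {
  private listeners: Listener<T>[] = [];

  // Observable role: anyone can subscribe to future values.
  subscribe(listener: Listener<T>): void {
    this.listeners.push(listener);
  }

  // Observer role: next() pushes a value to every current subscriber.
  next(value: T): void {
    this.listeners.forEach((listener) => listener(value));
  }
}

// Usage: two subscribers receive every word pushed with next().
const words$ = new ToySubject<{ type: string; word: string }>();
const seenByA: string[] = [];
const seenByB: string[] = [];

words$.subscribe((w) => { seenByA.push(w.word); });
words$.subscribe((w) => { seenByB.push(w.type + ':' + w.word); });

words$.next({ type: 'noun', word: 'cat' });
words$.next({ type: 'verb', word: 'running' });
// seenByA: ['cat', 'running']; seenByB: ['noun:cat', 'verb:running']
```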

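Inside the function that will run in the Angular zone, we'll use the RxJS observer `next()` method to push an object with `type` and `word` properties to the `words$` subject.

Finally, we'll pass our `commands` object to Annyang's `addCommands()` method to register it.

Annyang Callbacks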

The last thing we'll do in our `init()` method is add Annyang callbacks that handle errors and speech that doesn't match any command.

Note: You can check out the different callbacks that Annyang supports in the documentation.

```typescript
init() {
  ...
  // Log anything the user says and what speech recognition thinks it might be
  // annyang.addCallback('result', (userSaid) => {
  //   console.log('User may have said:', userSaid);
  // });
  annyang.addCallback('errorNetwork', (err) => {
    this._handleError('network', 'A network error occurred.', err);
  });
  annyang.addCallback('errorPermissionBlocked', (err) => {
    this._handleError('blocked', 'Browser blocked microphone permissions.', err);
  });
  annyang.addCallback('errorPermissionDenied', (err) => {
    this._handleError('denied', 'User denied microphone permissions.', err);
  });
  annyang.addCallback('resultNoMatch', (userSaid) => {
    this._handleError(
      'no match',
      'Spoken command not recognized. Say "noun [word]", "verb [word]", OR "adjective [word]".',
      { results: userSaid });
  });
}

private _handleError(error, msg, errObj) {
  this.zone.run(() => {
    this.errors$.next({
      error: error,
      message: msg,
      obj: errObj
    });
  });
}
```

The first block is commented out. If uncommented, this code adds a callback that logs all the possibilities for what speech recognition thinks the user may have said. While not practical for our app's final presentation, it's a fun way to experiment with the Web Speech API during development. Uncomment this block to see what the browser interprets, and comment it back out (or delete it) later.
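All four callbacks funnel into the same `{ error, message, obj }` shape before `_handleError()` emits it. To sketch that data flow, here is a hypothetical table-driven version that builds the same objects without Annyang or NgZone. `ERROR_INFO` and `toSpeechError()` are illustrative names and are not part of the tutorial's code.

```typescript
// Hypothetical, table-driven sketch of the service's error plumbing.
interface SpeechError {
  error: string;
  message: string;
  obj: unknown;
}

// Same codes and messages the tutorial passes to _handleError().
const ERROR_INFO: { [callback: string]: { error: string; message: string } } = {
  errorNetwork: { error: 'network', message: 'A network error occurred.' },
  errorPermissionBlocked: { error: 'blocked', message: 'Browser blocked microphone permissions.' },
  errorPermissionDenied: { error: 'denied', message: 'User denied microphone permissions.' },
  resultNoMatch: {
    error: 'no match',
    message: 'Spoken command not recognized. Say "noun [word]", "verb [word]", OR "adjective [word]".'
  }
};

// Build the object that would be emitted on errors$ for a callback.
function toSpeechError(callbackName: string, payload: unknown): SpeechError {
  const info = ERROR_INFO[callbackName];
  if (!info) {
    throw new Error('No error mapping for callback: ' + callbackName);
  }
  // resultNoMatch receives possible phrases, which the service wraps in { results }.
  const obj = callbackName === 'resultNoMatch' ? { results: payload } : payload;
  return { error: info.error, message: info.message, obj: obj };
}

// Usage
const noMatch = toSpeechError('resultNoMatch', ['now and cat', 'noun cap']);
// noMatch.error === 'no match'; noMatch.obj is { results: ['now and cat', 'noun cap'] }
```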

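Each callback passes data to a `_handleError()` method, which emits an error object on the `errors$` subject. As with `words$`, we use the zone's `run()` method inside `_handleError()` so that Angular is aware of the new value.

Finally, we'll add `startListening()` and `abort()` methods. These start and stop Annyang and keep the `listening` property up to date:

```typescript
startListening() {
  annyang.start();
  this.listening = true;
}

abort() {
  annyang.abort();
  this.listening = false;
}
```

That's it for our Speech service!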
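The `listening` flag is only updated inside `startListening()` and `abort()`, so those two methods are the one place where the flag and the recognizer stay in sync. The sketch below makes that start/stop logic testable with a fake recognizer instead of a microphone. It assumes a hypothetical `Recognizer` interface that contains just the two Annyang methods the service calls. `ListeningController` is likewise a hypothetical name.

```typescript
// Hypothetical: only the two Annyang methods the service uses.
interface Recognizer {
  start(): void;
  abort(): void;
}

// Same start/abort logic as SpeechService, with the recognizer passed in.
class ListeningController {
  listening = false;
  private recognizer: Recognizer;

  constructor(recognizer: Recognizer) {
    this.recognizer = recognizer;
  }

  startListening(): void {
    this.recognizer.start();
    this.listening = true;
  }

  abort(): void {
    this.recognizer.abort();
    this.listening = false;
  }
}

// Usage with a fake recognizer that only records calls.
const calls: string[] = [];
const fakeRecognizer: Recognizer = {
  start: () => { calls.push('start'); },
  abort: () => { calls.push('abort'); }
};

const controller = new ListeningController(fakeRecognizer);
controller.startListening(); // listening === true
controller.abort();          // listening === false
// calls is now ['start', 'abort']
```

In the tutorial's service, the global `annyang` plays the role of the recognizer. Passing it in like this is only one possible design.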

Listen Component

Now we need to build a component that uses the Speech service to listen to the user's spoken input.

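First let's add a couple of image assets. Create a new folder called `images` inside `src/assets`. The Listen component template references two microphone icons, `mic-on.png` and `mic-off.png`. Save them to your `src/assets/images` folder.

Now use the following Angular CLI command to generate a new component: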

$ ng g component listen

This creates and declares the component for us. The first iteration of our Listen Component will simply use the Speech service to listen to user input and subscribe to the subjects we created to log some information. This component will get more complex as we build out our app (interfacing with form inputs, supporting API word generation, etc.), but this is a good place to start.

Add Listen Component to App Component

The first thing we'll need to do now is display our component somewhere so we can view it in the browser.

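Open your `app.component.ts` file and add the following:

```typescript
// src/app/app.component.ts
...
import { SpeechService } from './speech.service';
...
export class AppComponent {
  constructor(public speech: SpeechService) {}
}
```

Before we add the Listen component, we'll import the `SpeechService` and make it available through the `constructor()`. This lets the template check the `speechSupported` accessor, so the Listen component only displays when the browser supports speech recognition.

Open up your `app.component.html` file and add the Listen component:

```html
<!-- src/app/app.component.html -->
<div class="container">
  <h1 class="text-center">Madlibs</h1>
  <app-listen *ngIf="speech.speechSupported"></app-listen>
</div>
```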

We'll do most of our development in Google Chrome since it supports Web Speech well at the time of writing. Make sure your app's server is running with `ng serve`.

Listen Component Class

Open the `listen.component.ts` file and add the following code:

```typescript
// src/app/listen/listen.component.ts
import { Component, OnInit, OnDestroy } from '@angular/core';
import { SpeechService } from './../speech.service';
import { Subscription } from 'rxjs/Subscription';
import 'rxjs/add/operator/filter';
import 'rxjs/add/operator/map';

@Component({
  selector: 'app-listen',
  templateUrl: './listen.component.html',
  styleUrls: ['./listen.component.scss']
})
export class ListenComponent implements OnInit, OnDestroy {
  nouns: string[];
  verbs: string[];
  adjs: string[];
  nounSub: Subscription;
  verbSub: Subscription;
  adjSub: Subscription;
  errorsSub: Subscription;
  errorMsg: string;

  constructor(public speech: SpeechService) { }

  ngOnInit() {
    this.speech.init();
    this._listenNouns();
    this._listenVerbs();
    this._listenAdj();
    this._listenErrors();
  }

  get btnLabel(): string {
    return this.speech.listening ? 'Listening...' : 'Listen';
  }

  private _listenNouns() {
    this.nounSub = this.speech.words$
      .filter(obj => obj.type === 'noun')
      .map(nounObj => nounObj.word)
      .subscribe(
        noun => {
          this._setError();
          console.log('noun:', noun);
        }
      );
  }

  private _listenVerbs() {
    this.verbSub = this.speech.words$
      .filter(obj => obj.type === 'verb')
      .map(verbObj => verbObj.word)
      .subscribe(
        verb => {
          this._setError();
          console.log('verb:', verb);
        }
      );
  }

  private _listenAdj() {
    this.adjSub = this.speech.words$
      .filter(obj => obj.type === 'adj')
      .map(adjObj => adjObj.word)
      .subscribe(
        adj => {
          this._setError();
          console.log('adjective:', adj);
        }
      );
  }

  private _listenErrors() {
    this.errorsSub = this.speech.errors$
      .subscribe(err => this._setError(err));
  }

  private _setError(err?: any) {
    if (err) {
      console.log('Speech Recognition:', err);
      this.errorMsg = err.message;
    } else {
      this.errorMsg = null;
    }
  }

  ngOnDestroy() {
    this.nounSub.unsubscribe();
    this.verbSub.unsubscribe();
    this.adjSub.unsubscribe();
    this.errorsSub.unsubscribe();
  }
}
```

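Let's step through this code. First we have our imports. In addition to `OnInit` and `OnDestroy`, we import our `SpeechService`, the RxJS `Subscription` type, and the RxJS `filter` and `map` operators.

Our component `implements OnInit, OnDestroy`. We declare `nouns`, `verbs`, and `adjs` arrays of type `string[]`. We also declare a subscription property for each part of speech, since each one subscribes to the `words$` subject. We also need to subscribe to the `errors$` subject, so we add an `errorsSub` subscription and an `errorMsg` property to hold the current error message.

In the `constructor()`, we inject the `SpeechService` and make it `public` so the template can access it.

The `ngOnInit()` lifecycle hook calls the `SpeechService`'s `init()` method to set up speech recognition. It then creates our subscriptions to the `words$` and `errors$` subjects.

We'll have buttons in the UI to start and stop listening. We'll change the text on the "Listen" button to indicate to the user whether the app is actively listening or not. To determine this, we'll use an accessor called `btnLabel`. It returns `"Listening..."` when the Speech service's `listening` property is `true`, and `"Listen"` otherwise.

Next we have the three methods that set up speech recognition subscriptions: `_listenNouns()`, `_listenVerbs()`, and `_listenAdj()`. Here's `_listenNouns()`:

```typescript
private _listenNouns() {
  this.nounSub = this.speech.words$
    .filter(obj => obj.type === 'noun')
    .map(nounObj => nounObj.word)
    .subscribe(
      noun => {
        this._setError();
        console.log('noun:', noun);
      }
    );
}
```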

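This function filters and maps the `words$` subject. The `filter` operator only passes through objects whose `type` is `'noun'`. The `map` operator then transforms each of those objects into just its `word` string. In the `subscribe()` callback, we call `_setError()` with no argument to clear any existing error message, and then `console.log` the noun.

We'll create two similar functions for verbs and adjectives. You may notice that our subscriptions don't perform any real functionality yet. That's fine, because at the moment we just want to make sure our speech recognition works. Integrating with a form and limiting the number of words of each type is something we'll do a little later.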
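The example below applies the same filter-then-map logic to a plain array of `{ type, word }` objects like the ones `words$` emits. Arrays are used only for illustration. `Array.prototype.filter` and `map` mirror the RxJS operators here, except that they process a complete list at once rather than values arriving over time. `wordsOfType()` is a hypothetical helper.

```typescript
// The objects words$ would emit over time, collected into an array.
interface WordEvent {
  type: string;
  word: string;
}

const emitted: WordEvent[] = [
  { type: 'noun', word: 'cat' },
  { type: 'verb', word: 'running' },
  { type: 'noun', word: 'library' },
  { type: 'adj', word: 'red' }
];

// Same predicate and projection as the component's subscriptions.
function wordsOfType(events: WordEvent[], type: string): string[] {
  return events
    .filter((obj) => obj.type === type) // keep one part of speech
    .map((obj) => obj.word);            // keep only the spoken word
}

const nounWords = wordsOfType(emitted, 'noun'); // ['cat', 'library']
const adjWords = wordsOfType(emitted, 'adj');   // ['red']
```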

Next we'll create our errors subscription and the `_setError()` method:

```typescript
private _listenErrors() {
  this.errorsSub = this.speech.errors$
    .subscribe(err => this._setError(err));
}

private _setError(err?: any) {
  if (err) {
    console.log('Speech Recognition:', err);
    this.errorMsg = err.message;
  } else {
    this.errorMsg = null;
  }
}
```

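The `errorsSub` subscription listens to the `errors$` subject and passes each error to `_setError()`. When `_setError()` receives an `err` argument, it logs the error and sets `errorMsg` to the error's message. `_listenNouns()`, `_listenVerbs()`, and `_listenAdj()` call it with no `err`, and in that case it resets `errorMsg` to `null`. A successfully recognized word means any previous error no longer applies.

The last thing we'll do is use the `ngOnDestroy()` lifecycle hook to unsubscribe from all of our subscriptions when the component is destroyed:

```typescript
ngOnDestroy() {
  this.nounSub.unsubscribe();
  this.verbSub.unsubscribe();
  this.adjSub.unsubscribe();
  this.errorsSub.unsubscribe();
}
```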
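The Speech service outlives the Listen component, so its subjects keep calling any callback that is still subscribed. The toy model below shows what `unsubscribe()` prevents. `MiniSubject` and `MiniSubscription` are hypothetical and only loosely mirror RxJS's `Subject` and `Subscription`.

```typescript
// Toy subject whose subscribe() returns an object with unsubscribe(),
// loosely mirroring RxJS's Subject and Subscription (hypothetical code).
interface MiniSubscription {
  unsubscribe(): void;
}

class MiniSubject<T> {
  private callbacks: Array<(value: T) => void> = [];

  subscribe(callback: (value: T) => void): MiniSubscription {
    this.callbacks.push(callback);
    return {
      // Remove this callback so it stops receiving values.
      unsubscribe: () => {
        this.callbacks = this.callbacks.filter((cb) => cb !== callback);
      }
    };
  }

  next(value: T): void {
    this.callbacks.forEach((cb) => cb(value));
  }
}

// Usage: a long-lived stream (like the service's errors$) and a short-lived subscriber.
const errors$ = new MiniSubject<string>();
const received: string[] = [];

const errorsSub = errors$.subscribe((msg) => { received.push(msg); }); // like ngOnInit()
errors$.next('network');

errorsSub.unsubscribe(); // like ngOnDestroy()
errors$.next('denied');  // no longer delivered

// received is ['network']
```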

That's it for the first phase of our Listen component class!

Listen Component Template

Next we'll create the template for our Listen component. Open the `listen.component.html` file and add the following markup:

```html
<!-- src/app/listen/listen.component.html -->
<div class="alert alert-info mt-3">
  <h2 class="text-center">Speak to Play</h2>
  <p>Your browser <a class="alert-link" href="https://developer.mozilla.org/en-US/docs/Web/API/SpeechRecognition#Browser_compatibility">supports speech recognition</a>! To play a madlib game using speech, follow these instructions:</p>
  <ol>
    <li>Click the <em>"Listen"</em> button below.</li>
    <li>If prompted, grant the app permission to use your device's microphone.</li>
    <li>
      Clearly say a <em>type</em> of word (also known as a "part of speech") followed by <em>one</em> word to fill in the form below. Here are some examples:
      <ul>
        <li><em>"noun <strong>cat</strong>"</em> (person, place, or thing)</li>
        <li><em>"verb <strong>jumping</strong>"</em> (action, present tense), <em>"verb <strong>ran</strong>"</em> (action, past tense)</li>
        <li><em>"adjective <strong>flashy</strong>"</em> (describing word)</li>
      </ul>
    </li>
    <li>Say <em>one command at a time</em>, then wait for the app to assess your speech to fill a madlib field. This could take a few seconds.</li>
    <li>Repeat until all fields are filled in.</li>
  </ol>
  <p>You may also <em>"Stop"</em> listening at any time and enter (or edit) words manually.</p>
  <div class="row mb-3">
    <div class="col btn-group">
      <button
        class="btn btn-primary col-6"
        (click)="speech.startListening()"
        [disabled]="speech.listening">
        <img class="icon" src="/assets/images/mic-on.png"/>{{btnLabel}}
      </button>
      <button
        class="btn btn-danger col-6"
        (click)="speech.abort()"
        [disabled]="!speech.listening">
        <img class="icon" src="/assets/images/mic-off.png"/>Stop
      </button>
    </div>
  </div>
  <div class="row" *ngIf="errorMsg">
    <div class="col">
      <p class="alert alert-warning">
        {{errorMsg}}
      </p>
    </div>
  </div>
</div>
```

This component only shows if the user's browser supports speech recognition, so we'll introduce how it works and display some instructions. Then we'll show two buttons to start and stop listening. On a `(click)` event, these buttons call the `startListening()` and `abort()` methods from our `SpeechService`.

Note: If you need a refresher, see the Angular documentation on binding syntax to read about interpolation and data binding in templates.

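The start listening button uses `btnLabel` as its text. Both buttons have `[disabled]` bindings based on `speech.listening`: the start button is disabled while the app is listening, and the stop button is disabled while it isn't. Each button also displays one of the microphone icons we saved in `src/assets/images`.

Finally, we'll create a conditional alert to display errors. This element should only show if there is an `errorMsg`.

Listen Component Styles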

Most of our styling is done with Bootstrap, but let's add one SCSS ruleset in our `listen.component.scss` file:

```scss
/* src/app/listen/listen.component.scss */
.icon {
  display: inline-block;
  margin-right: 6px;
  vertical-align: middle;
}
```

Now our microphone icons are aligned.

Playing With Speech Recognition

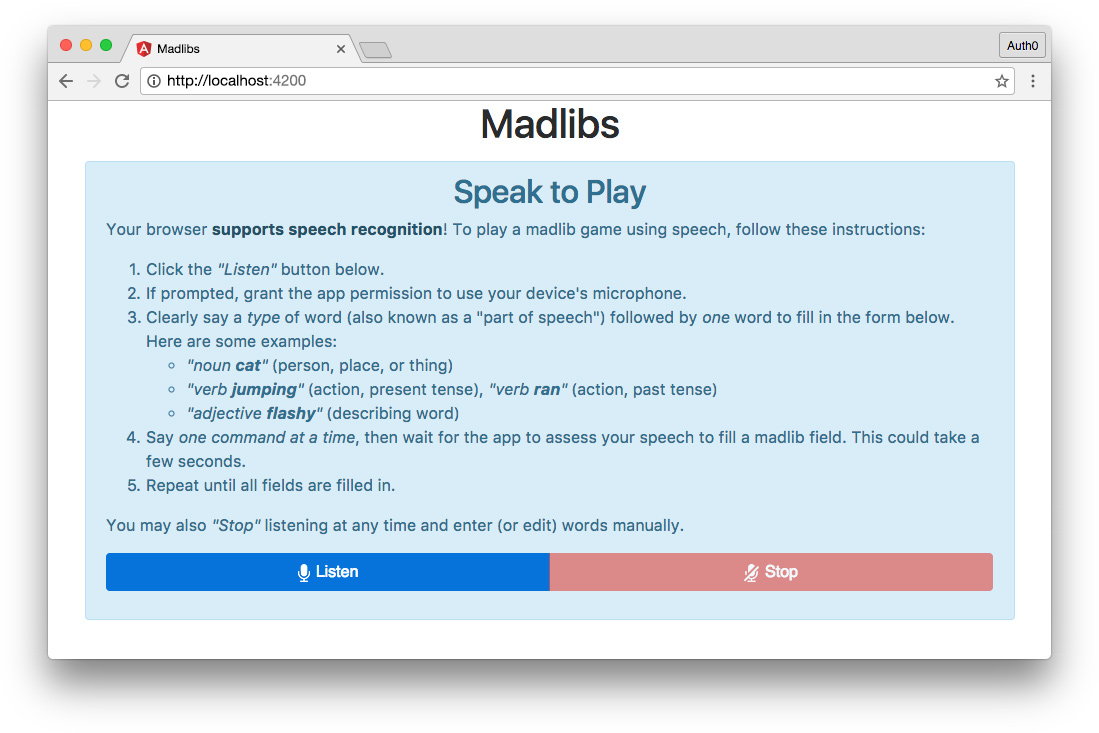

It's time to test out our speech recognition feature! The app should now look like this in the browser:

Note: Make sure you're using a browser that supports Web Speech API, such as Google Chrome.

Open the developer tools panel in the browser. This is where speech recognition will be logged. Then click the "Listen" button and grant your browser permission to use the microphone, if a popup appears. You can then speak the commands we set up and the results will be logged to the console. When you're finished experimenting with the Web Speech API, click the "Stop" button to stop listening. If everything works as expected, we can move on to the next step: adding a form where the user can edit words from speech recognition or manually enter their own.

Madlibs Service

Next let's create a service that provides various reusable methods for our Madlibs app. Generate a service with the Angular CLI like so:

$ ng g service madlibs

Then open the new `madlibs.service.ts` file and add the following:

```typescript
// src/app/madlibs.service.ts
import { Injectable } from '@angular/core';
import { Subject } from 'rxjs/Subject';

@Injectable()
export class MadlibsService {
  submit$ = new Subject<any>();
  words: any;

  constructor() { }

  submit(eventObj) {
    // form submitted with form results
    this.submit$.next(eventObj);
    this.words = eventObj;
  }
}
```

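Let's import `Subject` and create a `submit$` subject. The `submit()` method emits the submitted form results on `submit$` and also stores them in the `words` property.

Provide Madlibs Service in App Module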

In order to use this service, we need to provide it. Open the `app.module.ts` file and add the following:

```typescript
// src/app/app.module.ts
...
import { MadlibsService } from './madlibs.service';
...
@NgModule({
  ...,
  providers: [
    ...,
    MadlibsService
  ],
  ...
```

Import the new `MadlibsService` and add it to the `providers` array.

Words Class

Let's make a class that can create new instances of the arrays we'll need for storing words:

$ ng g class words

Open the new `words.ts` file and add the following:

```typescript
// src/app/words.ts
export class Words {
  constructor(
    public array: string[] = []
  ) {
    for (let i = 0; i < 5; i++) {
      array.push('');
    }
  }
}
```

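Our class, `Words`, creates an array of five empty strings. We can use it to initialize word arrays in our components like so:

```typescript
nouns: string[] = new Words().array;
```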

This creates a new instance of an array that looks like this:

```typescript
['', '', '', '', '']
```

Using a constructor and the `new` keyword means that every `Words` instance gets its own separate array. We can now use the `Words` class in other components, so it's time to make some updates.
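The following sketch checks that claim. It repeats the `Words` class with an explicit field instead of the `public array` parameter property, which gives the same behavior. It shows that two instances never share an array, because a default parameter value such as `= []` is evaluated anew on every call.

```typescript
// The tutorial's Words class, written with an explicit field instead of a
// parameter property; it still pushes five empty strings into the array.
class Words {
  array: string[];

  constructor(array: string[] = []) {
    this.array = array;
    for (let i = 0; i < 5; i++) {
      array.push('');
    }
  }
}

// Each call to new Words() evaluates the default `[]` again,
// so every instance gets a brand-new array.
const nouns: string[] = new Words().array;
const verbs: string[] = new Words().array;

nouns[0] = 'cat';
// nouns: ['cat', '', '', '', '']; verbs is still ['', '', '', '', '']

// By contrast, two names pointing at ONE array object stay linked.
const shared = new Words().array;
const alias = shared;
alias[0] = 'dog';
// shared[0] is now 'dog' as well
```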

Update Listen Component

Recall that we're just logging words (as strings) to the console right now in our Listen component. It's time to update the component to store arrays of words.

Before we update the code itself, let's briefly review our goals. We want to:

- Store nouns, verbs, and adjectives in arrays of type `string[]`. There should be five words in each array.

- Show an error when a user tries to continue speaking words when there are already five for that part of speech.

- If the user manually deletes any words using the form, spoken commands should then fill in the missing words appropriately where there are openings.

Now let's develop the functionality to facilitate these goals.

Update Listen Component Class

Open the `listen.component.ts` file and update it like so:

```typescript
// src/app/listen/listen.component.ts
...
import { Words } from './../words';
...
  nouns: string[] = new Words().array;
  verbs: string[] = new Words().array;
  adjs: string[] = new Words().array;
  ...
  arrayFull: string;
  ...

  private _listenNouns() {
    ...
      .subscribe(
        ...
          this.nouns = this._updateWords('nouns', this.nouns, noun);
        }
      );
  }

  ...

  private _listenVerbs() {
    ...
      .subscribe(
        ...
          this.verbs = this._updateWords('verbs', this.verbs, verb);
        }
      );
  }

  ...

  private _listenAdj() {
    ...
      .subscribe(
        ...
          this.adjs = this._updateWords('adjectives', this.adjs, adj);
        }
      );
  }

  ...

  private _updateWords(type: string, arr: string[], newWord: string) {
    const _checkArrayFull = arr.every(item => !!item === true);

    if (_checkArrayFull) {
      this.arrayFull = type;
      return arr;
    } else {
      let _added = false;
      this.arrayFull = null;
      return arr.map(item => {
        if (!item && !_added) {
          _added = true;
          return newWord;
        } else {
          return item;
        }
      });
    }
  }

  ...
```

Let's go over this step by step.

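The first change we'll make is to import our `Words` class from `words.ts`. Then we'll use `new Words().array` to initialize our `nouns`, `verbs`, and `adjs` arrays:

```typescript
nouns: string[] = new Words().array;
verbs: string[] = new Words().array;
adjs: string[] = new Words().array;
```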

This ensures that the three arrays are separate instances that can't affect one another, even though they all share the same data shape.

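Next we'll add a new property, `arrayFull: string`, to store the part of speech whose array is already full.

Then we'll update each of our `words$` `subscribe()` callbacks. In place of the `console.log`, each one now updates its array with the result of a new method:

```typescript
this.nouns = this._updateWords('nouns', this.nouns, noun);
this.verbs = this._updateWords('verbs', this.verbs, verb);
this.adjs = this._updateWords('adjectives', this.adjs, adj);
```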

We'll create this new `_updateWords()` method:

```typescript
private _updateWords(type: string, arr: string[], newWord: string) {
  const _checkArrayFull = arr.every(item => !!item === true);

  if (_checkArrayFull) {
    this.arrayFull = type;
    return arr;
  } else {
    let _added = false;
    this.arrayFull = null;
    return arr.map(item => {
      if (!item && !_added) {
        _added = true;
        return newWord;
      } else {
        return item;
      }
    });
  }
}
```

This function takes the following arguments:

- `type`: a user-friendly string representing the part of speech, e.g., `"adjectives"`, used for error messaging when the array is full

- `arr`: the component property that is the array of words for the specific part of speech, e.g., `this.nouns`

- `newWord`: the spoken word recognized by the Web Speech API
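To try this logic outside of Angular, here is a standalone sketch of the same algorithm. The only difference is that it returns the `arrayFull` value instead of assigning it to the component. `updateWords()` and `UpdateResult` are hypothetical names.

```typescript
// Standalone version of _updateWords(); arrayFull is returned, not stored.
interface UpdateResult {
  words: string[];
  arrayFull: string | null;
}

function updateWords(type: string, arr: string[], newWord: string): UpdateResult {
  // Full means every slot already holds a non-empty string.
  const isFull = arr.every((item) => !!item);
  if (isFull) {
    return { words: arr, arrayFull: type };
  }
  let added = false;
  const words = arr.map((item) => {
    // Fill only the first empty slot; leave everything else as-is.
    if (!item && !added) {
      added = true;
      return newWord;
    }
    return item;
  });
  return { words: words, arrayFull: null };
}

// Start from a partially filled form where the first field was cleared.
let nounList = ['', 'park', '', '', ''];
nounList = updateWords('nouns', nounList, 'cat').words;   // ['cat', 'park', '', '', '']
nounList = updateWords('nouns', nounList, 'beach').words; // ['cat', 'park', 'beach', '', '']

const full = updateWords('nouns', ['a', 'b', 'c', 'd', 'e'], 'f');
// full.arrayFull === 'nouns' and full.words is unchanged
```

The first call shows the behavior from our goals list. After the user clears a field, a spoken word fills the first opening rather than being appended at the end.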

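The first thing `_updateWords()` does is use `every()` to check whether the array is full, meaning that no item is an empty string `''`. If the array is full, we'll set `this.arrayFull` to the `type` and return the array unchanged.

If there are openings in the array for new words, we'll reset `this.arrayFull` to `null`. Then we'll use `map()` to return a new array in which the first empty item is replaced with the new word and all other items stay the same.

Update Listen Component Template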

Now that we have an `arrayFull` property, let's update the error messaging in our `listen.component.html` template:

```html
<!-- src/app/listen/listen.component.html -->
...
  <div class="row" *ngIf="errorMsg || arrayFull">
    <div class="col">
      <p class="alert alert-warning">
        <ng-template [ngIf]="errorMsg">{{errorMsg}}</ng-template>
        <ng-template [ngIf]="arrayFull">You've already filled in all the available fields for <strong>{{arrayFull}}</strong>.</ng-template>
      </p>
    </div>
  </div>
...
```

In the element containing our error messaging, we'll change the `*ngIf` condition to `errorMsg || arrayFull`. Inside the alert, we'll use two `ng-template` elements. One displays the `errorMsg`, and the other displays a message when `arrayFull` is set.

Note: The Angular NgIf directive can be used with an `<ng-template [ngIf]>` element if you don't want to render an extra container in the markup. In many cases, we already have a container wrapping whatever we want to show or hide, so we use `<div *ngIf>`.
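The alert's visibility rules can be summarized as a small function. `alertMessages()` is a hypothetical helper that models the template; the component doesn't need it. The outer `*ngIf` hides everything when both values are empty. Each `ng-template` adds its own message, so both messages can appear at once.

```typescript
// Hypothetical model of the alert template: outer *ngIf plus two ng-templates.
function alertMessages(errorMsg: string | null, arrayFull: string | null): string[] {
  const messages: string[] = [];
  if (!(errorMsg || arrayFull)) {
    return messages; // outer *ngIf is false: the alert row isn't rendered
  }
  if (errorMsg) {
    messages.push(errorMsg);
  }
  if (arrayFull) {
    messages.push("You've already filled in all the available fields for " + arrayFull + '.');
  }
  return messages;
}

// Usage
const none = alertMessages(null, null);                            // []
const onlyFull = alertMessages(null, 'verbs');                      // one message about verbs
const both = alertMessages('A network error occurred.', 'nouns');   // both messages
```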

Words Form Component

Whether or not the user's browser supports speech recognition, we'll need a component with a form that displays and allows manual entry and editing of words the user wants to use in their madlib.

Add Forms Module to App Module

We're going to create a form, so first we need to add the `FormsModule` to our `app.module.ts`:

```typescript
// src/app/app.module.ts
...
import { FormsModule } from '@angular/forms';
...
@NgModule({
  ...,
  imports: [
    ...,
    FormsModule
  ],
  ...
```

We'll import `FormsModule` and add it to the `imports` array.

Create and Display Words Form Component

Let's create a Words Form component now:

$ ng g component words-form

We'll add this component to our Listen component. Open the `listen.component.html` template and add the following:

```html
<!-- src/app/listen/listen.component.html -->
...
<app-words-form
  [nouns]="nouns"
  [verbs]="verbs"
  [adjs]="adjs"></app-words-form>
```

Until we add our TypeScript, we'll receive an error when compiling. The `<app-words-form>` element binds `[nouns]`, `[verbs]`, and `[adjs]`, but the Words Form component doesn't define these as inputs yet.

Words Form Component Class

Once again, before we write our code, let's think about what we want to achieve.

- Show 5 input fields for each part of speech, making 15 fields total (nouns, verbs, adjectives).

- Each field needs placeholder text telling the user what kind of subject or tense should be used (such as `person`, `place`, `present`, `past`, etc.).

- Create a `trackBy` function that distinguishes each item in an array as unique.

Open the `words-form.component.ts` file and add the following:

```typescript
// src/app/words-form/words-form.component.ts
import { Component, Input } from '@angular/core';
import { MadlibsService } from './../madlibs.service';

@Component({
  selector: 'app-words-form',
  templateUrl: './words-form.component.html',
  styleUrls: ['./words-form.component.scss']
})
export class WordsFormComponent {
  @Input() nouns: string[];
  @Input() verbs: string[];
  @Input() adjs: string[];
  generating = false;
  placeholders = {
    noun: ['person', 'place', 'place', 'thing', 'thing'],
    verb: ['present', 'present', 'past', 'past', 'past']
  };

  constructor(private ml: MadlibsService) { }

  trackWords(index) {
    return index;
  }

  getPlaceholder(type: string, index: number) {
    return this.placeholders[type][index];
  }

  done() {
    this.ml.submit({
      nouns: this.nouns,
      verbs: this.verbs,
      adjs: this.adjs
    });
    this.generating = true;
  }
}
```

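First we'll import `Input` and our `MadlibsService`.

The `@Input()` decorator allows data bound on the `<app-words-form>` element to be passed from the parent Listen component into the `WordsFormComponent`:

```typescript
@Input() nouns: string[];
@Input() verbs: string[];
@Input() adjs: string[];
```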

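Next we'll create two properties. The first is simply a boolean called `generating`. It is set to `true` when the form is submitted, which disables the inputs. The second is a `placeholders` object that holds the placeholder text for each noun and verb input, in order.

Note: Adjectives are excluded from this object because all adjective placeholders should be the same.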
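Here's a standalone sketch of how `getPlaceholder()` pairs each input index with a hint from the `placeholders` object. The fallback to `'adjective'` for types that have no entry is a hypothetical addition. In the tutorial, the adjective inputs use a static `placeholder` attribute instead.

```typescript
// Placeholder hints copied from WordsFormComponent.
const placeholders: { [type: string]: string[] } = {
  noun: ['person', 'place', 'place', 'thing', 'thing'],
  verb: ['present', 'present', 'past', 'past', 'past']
};

// Look up the hint for a given part of speech and input index.
// Hypothetical fallback: types without an entry get 'adjective'.
function getPlaceholder(type: string, index: number): string {
  const hints = placeholders[type];
  return hints ? hints[index] : 'adjective';
}

// Usage: the five verb inputs, in order.
const verbHints = [0, 1, 2, 3, 4].map((i) => getPlaceholder('verb', i));
// ['present', 'present', 'past', 'past', 'past']
```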

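The constructor makes the `MadlibsService` privately available to the component.

The next method, `trackWords()`, is a `trackBy` function for our `ngFor` loops. `trackWords()` returns the item's `index`, so Angular tracks each input by its position in the array rather than by its value.

The `getPlaceholder()` method accepts a `type` and an `index` and returns the matching placeholder string from the `placeholders` object.

Finally, the `done()` method passes the nouns, verbs, and adjectives to the `submit()` method from our `MadlibsService`, then sets `generating` to `true`.

Words Form Component Template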

Open the `words-form.component.html` file and add the following markup:

```html
<!-- src/app/words-form/words-form.component.html -->
<form (submit)="done()" #wordsForm="ngForm">
  <div class="row">
    <div class="col-md-4">
      <h3>Nouns <a class="badge badge-pill badge-info" href="http://www.grammar-monster.com/lessons/nouns.htm" title="Nouns are naming words, usually people, places, or things." target="_blank">?</a></h3>
      <p class="small">For best results, please enter 1 <strong>person</strong>, 2 <strong>places</strong>, and 2 <strong>things</strong>.</p>
      <div *ngFor="let noun of nouns; index as i; trackBy: trackWords" class="mb-2">
        <input
          type="text"
          name="noun-{{i}}"
          class="form-control"
          [(ngModel)]="nouns[i]"
          [disabled]="generating"
          [placeholder]="getPlaceholder('noun', i)"
          required>
      </div>
    </div>
    <div class="col-md-4">
      <h3>Verbs <a class="badge badge-pill badge-info" href="http://www.grammar-monster.com/lessons/verbs.htm" title="Verbs are doing words: physical action, mental action, or state of being." target="_blank">?</a></h3>
      <p class="small">For best results, use <strong>2 present tense</strong> verbs, then <strong>3 past tense</strong>.</p>
      <div *ngFor="let verb of verbs; index as i; trackBy: trackWords" class="mb-2">
        <input
          type="text"
          name="verb-{{i}}"
          class="form-control"
          [(ngModel)]="verbs[i]"
          [disabled]="generating"
          [placeholder]="getPlaceholder('verb', i)"
          required>
      </div>
    </div>
    <div class="col-md-4">
      <h3>Adjectives <a class="badge badge-pill badge-info" href="http://www.grammar-monster.com/lessons/adjectives.htm" title="Adjectives are describing words." target="_blank">?</a></h3>
      <p class="small">For best results, be particularly <strong>creative</strong> with your adjectives.</p>
      <div *ngFor="let adj of adjs; index as i; trackBy: trackWords" class="mb-2">
        <input
          type="text"
          name="adj-{{i}}"
          class="form-control"
          [(ngModel)]="adjs[i]"
          [disabled]="generating"
          placeholder="adjective"
          required>
      </div>
    </div>
  </div>
  <div class="row">
    <div class="col mt-3 mb-3">
      <button
        class="btn btn-block btn-lg btn-success"
        [disabled]="!wordsForm.form.valid">Go!</button>
    </div>
  </div>
</form>
```

Here we have the template-driven form. When words are spoken via the Web Speech API, they automatically fill in the appropriate fields. The user can also enter or edit the words manually. Let's go over this code more thoroughly.

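The `<form>` element has a `(submit)` event that executes our `done()` method. Because of this, we don't need to attach a click event to the submit `<button>`.

We'll also add a template reference variable, `#wordsForm="ngForm"`, so we can use `wordsForm` to check the form's validity.

We'll create three columns in our Words Form template UI using Bootstrap CSS. Each column contains a heading, a help link, short text instructions, and the input fields for a specific part of speech.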

NgFor with Word Inputs

Let's discuss the NgFor loops that contain the input fields for words in each of the three arrays (nouns, verbs, and adjectives). The noun loop looks like this:

```html
<div *ngFor="let noun of nouns; index as i; trackBy: trackWords" class="mb-2">
  <input
    type="text"
    name="noun-{{i}}"
    class="form-control"
    [(ngModel)]="nouns[i]"
    [disabled]="generating"
    [placeholder]="getPlaceholder('noun', i)"
    required>
</div>
```
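Why return the index from `trackWords()`? Our arrays hold primitive strings, so if we tracked items by value, editing a word would change that item's identity. The simplified model below counts the rows whose tracking key didn't exist in the previous render. Those are the rows where a new `<input>` would be created, and in practice that can make the field being edited lose focus. `rowsToCreate()` is hypothetical and is not Angular's actual differ.

```typescript
// Deliberately simplified model of keyed list rendering (not Angular's
// IterableDiffer): a row is reused only if an old row had the same key.
type TrackByFn = (index: number, item: string) => unknown;

function rowsToCreate(prev: string[], next: string[], trackBy: TrackByFn): number {
  const remaining = prev.map((item, i) => trackBy(i, item));
  let created = 0;
  next.forEach((item, i) => {
    const pos = remaining.indexOf(trackBy(i, item));
    if (pos === -1) {
      created++;                 // no matching old row: a new <input> is built
    } else {
      remaining.splice(pos, 1);  // reuse one old row with this key
    }
  });
  return created;
}

const byValue: TrackByFn = (_index, item) => item; // like tracking by identity
const byIndex: TrackByFn = (index) => index;       // like trackWords()

// The user types "d" into the second noun field (previously empty).
const before = ['cat', '', '', '', ''];
const after = ['cat', 'd', '', '', ''];

const createdByValue = rowsToCreate(before, after, byValue); // 1: the edited field is rebuilt
const createdByIndex = rowsToCreate(before, after, byIndex); // 0: every field keeps its element
```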

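The NgFor loop iterates over each item in the `nouns` array. We use `index as i` to expose each item's index, and `trackBy` to tell Angular to track items with our `trackWords` method.

Each `<input>` needs a unique `name` to work with `ngModel` inside a form, so we use `i` to generate names like `noun-0`, `noun-1`, and so on. Each input is two-way bound to its array item with `[(ngModel)]="nouns[i]"` and is disabled while `generating` is `true`. The `[placeholder]` is set by calling `getPlaceholder()`, and the `required` attribute ensures the field must be filled in.

Submit Button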

Last but not least, we'll add a submit `<button>`:

```html
<div class="row">
  <div class="col mt-3 mb-3">
    <button
      class="btn btn-block btn-lg btn-success"
      [disabled]="!wordsForm.form.valid">Go!</button>
  </div>
</div>
```

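As mentioned earlier, this button doesn't need an event attached to it. Clicking it submits the form, which executes the handler in `<form (submit)="done()">`. The button is `[disabled]` until the form is valid. We check this through the `wordsForm` template reference variable with `wordsForm.form.valid`.

Words Form Component Styles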

If we check out our form in the browser, you may notice that the placeholder text is very dark: it almost looks like normal text has already been entered. We don't want to give that impression, so let's add a few styles in the `words-form.component.scss` file:

```scss
/* src/app/words-form/words-form.component.scss */
input::-webkit-input-placeholder { /* Chrome/Opera/Safari */
  color: rgba(0,0,0,.25);
  opacity: 1;
}
input::-moz-placeholder { /* Firefox 19+ */
  color: rgba(0,0,0,.25);
  opacity: 1;
}
input:-moz-placeholder { /* Firefox 18- */
  color: rgba(0,0,0,.25);
  opacity: 1;
}
input:-ms-input-placeholder { /* IE 10+ */
  color: rgba(0,0,0,.25);
  opacity: 1;
}
```

These component styles override the Bootstrap defaults specifying the dark placeholder text color.

Try It Out

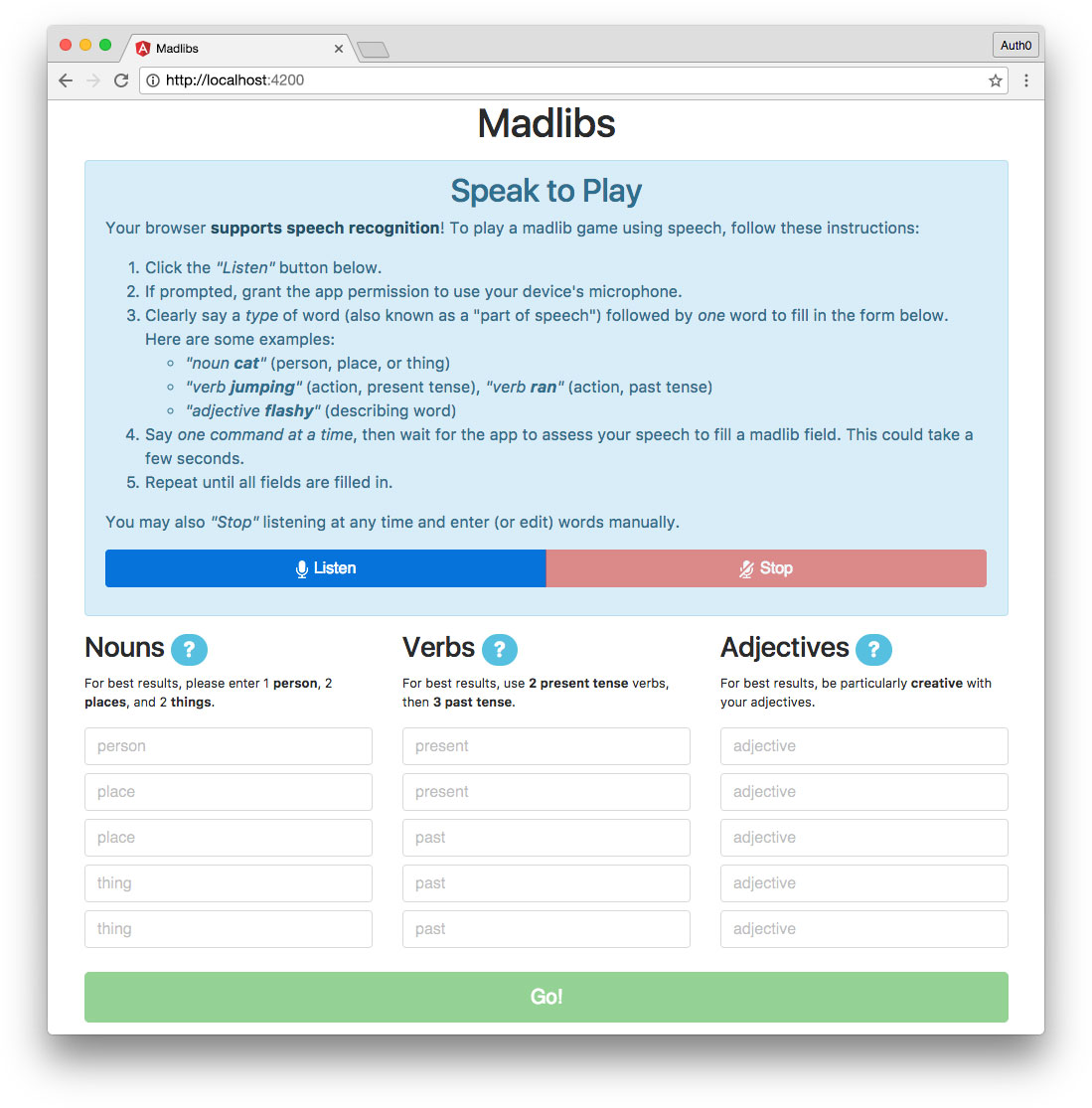

Our app should now look like this:

Now is a great time to test out the functionality of our Listen component and Words Form component. Try clicking the "Listen" button and issuing spoken commands. It takes a second or two for the Web Speech API to assess speech, but once it does, your spoken words should appear in the appropriate form fields.

Try a variety of things:

- Try saying things that are not the commands we set up.

- Try speaking additional words for a word type after all words have been filled in already.

- Try deleting a word or two manually and then using speech to fill them back in.

- Try typing in some words and then using speech to fill in the rest.

If we've done everything correctly, all of the above scenarios should be accounted for and the app should respond appropriately. Once all word fields have been populated, the "Go!" button to submit the form should enable (though it won't do anything visible yet).
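The rule that enables the "Go!" button can be sketched as a plain function. Every input is `required`, so `wordsForm.form.valid` becomes `true` only once all 15 fields contain text. `isFormComplete()` is a hypothetical helper that mirrors that check. Angular's `required` validator treats an empty string as missing, just like the `!!word` test here.

```typescript
// Hypothetical mirror of the form's validity: all fields must be non-empty.
function isFormComplete(nouns: string[], verbs: string[], adjs: string[]): boolean {
  return [nouns, verbs, adjs].every((list) => list.every((word) => !!word));
}

// Usage
const nounsIn = ['cat', 'park', 'beach', 'hat', 'cup'];
const verbsIn = ['run', 'jump', 'ran', 'sang', 'ate'];
const adjsIn = ['red', 'shiny', 'tiny', 'loud', ''];

const beforeLastWord = isFormComplete(nounsIn, verbsIn, adjsIn); // false: one adjective is empty
adjsIn[4] = 'fuzzy';
const afterLastWord = isFormComplete(nounsIn, verbsIn, adjsIn);  // true: "Go!" would enable
```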

If everything works as expected, congratulations! You've implemented speech recognition with Angular.

Aside: Securing Applications with Auth0

Are you building a B2C, B2B, or B2E tool? Auth0 can help you focus on what matters the most to you, the special features of your product. Auth0 can improve your product's security with state-of-the-art features like passwordless, breached password surveillance, and multifactor authentication.

We offer a generous free tier so you can get started with modern authentication.

Conclusion

In Part 1 of our 2-part tutorial, we covered basic concepts of reactive programming and observables and an introduction to speech recognition. We set up our Madlibs app and implemented speech recognition and an editable form where users can enter and modify words to eventually generate a madlibs story.

In the next part of our tutorial, we'll implement fallback functionality for browsers that don't support speech recognition. We'll also fetch words from an API if the user doesn't want to enter their own, and we'll learn about RxJS operators that make it easy to manage and combine observables. Of course, we'll also generate our madlib story with the user's words. As a final bonus, we'll learn how to authenticate an Angular app and Node API with Auth0.

When you're finished with both parts, you should be ready to tackle more advanced reactive programming projects with Angular!

About the author

Kim Maida

Group Manager, Developer Content