Rust has picked up a lot of momentum since we last looked at it in 2015. Companies like Amazon and Microsoft have adopted it for a growing number of use cases. Microsoft, for example, sponsors the Actix project on GitHub, a general-purpose open source actor framework written in Rust. The Actix project also maintains actix-web, a framework for building RESTful APIs that is widely regarded as fast. Although the project was temporarily on hold in early 2020, ownership has moved to a new maintainer, and development continues.

In this article, we will explore the actix-web web framework by writing a small CRUD API using it. Our API will be backed by a Postgres database using Diesel. Finally, we will implement authentication for our API using Auth0.

Getting Started

The first step is to install Rust and its related tools. The community-supported method is Rustup, so that's what we'll use in this tutorial. The installation instructions are available here. During installation, select the default option (which should amend $PATH to include Cargo's bin directory). Once Rust is installed, create a new project with Cargo:

    cargo init --bin rust-blogpost-auth-async

This will create a directory with the given name and a few files in it. Let's open the Cargo.toml file and add our dependencies:

    [package]
    name = "rust-blogpost-auth-async"
    version = "0.1.0"
    authors = ["First Last <no@gmail.com>"]
    edition = "2018"

    [dependencies]
    actix-web = "2.0.0"
    actix-web-httpauth = { git = "https://github.com/actix/actix-web-httpauth" }
    chrono = { version = "0.4.10", features = ["serde"] }
    derive_more = "0.99.2"
    diesel = { version = "1.4.2", features = ["postgres","uuidv07", "r2d2", "chrono"] }
    dotenv = "0.15.0"
    futures = "0.3.1"
    r2d2 = "0.8.8"
    serde = "1.0"
    serde_derive = "1.0"
    serde_json = "1.0"
    actix-service = "1.0.1"
    alcoholic_jwt = "1.0.0"
    reqwest = "0.9.22"
    actix-rt = "1.0.0"

We will explain why we need these dependencies as we move forward. Rust has recently implemented two new features which we'll see in action in this application. As shown in Cargo.toml, we are using the 2018 edition of Rust, which is what lets us use them.

Setting Up the API

In this tutorial, we will build an API with a single resource. It should be able to create a new user from a JSON input, display a user given their id, delete a user by id, and list all users. Thus, we will have the following endpoints:

GET /users: returns all users

GET /users/{id}: returns the user with a given id

POST /users: takes in a JSON payload and creates a new user based on it

DELETE /users/{id}: deletes the user with a given id
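Taken together, these endpoints form a small routing table keyed on HTTP method and path. As a rough, framework-free sketch of that mapping (the Route enum and match_route function are hypothetical names used only for illustration; actix-web does this matching for us through its own router):

```rust
/// The four operations our API exposes (hypothetical names, for illustration only).
#[derive(Debug, PartialEq)]
enum Route {
    ListUsers,
    GetUser(i32),
    AddUser,
    DeleteUser(i32),
}

/// Map an HTTP method and a path onto one of the routes, if any matches.
fn match_route(method: &str, path: &str) -> Option<Route> {
    // Split "/users/42" into ["users", "42"], ignoring empty segments.
    let segments: Vec<&str> = path.split('/').filter(|s| !s.is_empty()).collect();
    match (method, segments.as_slice()) {
        ("GET", ["users"]) => Some(Route::ListUsers),
        ("POST", ["users"]) => Some(Route::AddUser),
        // A non-numeric id fails to parse and therefore matches no route.
        ("GET", ["users", id]) => id.parse::<i32>().ok().map(Route::GetUser),
        ("DELETE", ["users", id]) => id.parse::<i32>().ok().map(Route::DeleteUser),
        _ => None,
    }
}
```

With this sketch, match_route("GET", "/users/7") yields Some(Route::GetUser(7)), while an unknown method or a non-numeric id yields None.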

Cargo will create a barebones main.rs file for us. Let's open it and import what we need from actix_web:

    // src/main.rs
    use actix_web::{web, App, HttpServer};

We will create four different routes in our application to handle the endpoints described previously. To keep our code well organized, we will put the handlers in a separate module called handlers, which we declare in main.rs:

    mod handlers;

Now our main function looks like this:

    // src/main.rs
    // dependencies here
    // module declaration here

    #[actix_rt::main]
    async fn main() -> std::io::Result<()> {
        std::env::set_var("RUST_LOG", "actix_web=debug");

        // Start http server
        HttpServer::new(move || {
            App::new()
                .route("/users", web::get().to(handlers::get_users))
                .route("/users/{id}", web::get().to(handlers::get_user_by_id))
                .route("/users", web::post().to(handlers::add_user))
                .route("/users/{id}", web::delete().to(handlers::delete_user))
        })
        .bind("127.0.0.1:8080")?
        .run()
        .await
    }
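The ? after bind only compiles because main returns a Result. As a minimal std-only sketch of that mechanism (parse_bind_addr is a hypothetical helper, not something actix-web provides), ? hands any error back to the caller and otherwise unwraps the value:

```rust
use std::net::{AddrParseError, SocketAddr};

/// Parse a bind address, propagating any parse error to the caller with `?`.
/// (`parse_bind_addr` is a hypothetical helper used only for illustration.)
fn parse_bind_addr(raw: &str) -> Result<SocketAddr, AddrParseError> {
    // On failure `?` returns early with the error; on success it yields the value.
    let addr: SocketAddr = raw.parse()?;
    Ok(addr)
}
```

Calling parse_bind_addr("127.0.0.1:8080") returns an Ok value holding the socket address, while a malformed string comes back as an Err that the caller can handle or propagate further.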

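The first important point to note here is that we are returning a Result type from main. This enables us to use the ? operator in main, which passes any error back to the caller. The second thing to note is async and await. These are the two features mentioned earlier that we're able to use because we specified the 2018 version of Rust in Cargo.toml.

Notice the use of the annotation #[actix_rt::main] on our main function. Actix needs a runtime to schedule and run its work, and the actix_rt crate provides it. We mark main to be executed by the actix runtime using the actix_rt::main attribute. In main, we create an HttpServer, add an App to it, and serve it on localhost. The App maps each route to a function in the handlers module.

The next step is to write the handlers in a new file called handlers.rs:

    touch src/handlers.rs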

We can then paste the following code into that file:

    // src/handlers.rs
    use actix_web::Responder;

    pub async fn get_users() -> impl Responder {
        format!("hello from get users")
    }

    pub async fn get_user_by_id() -> impl Responder {
        format!("hello from get users by id")
    }

    pub async fn add_user() -> impl Responder {
        format!("hello from add user")
    }

    pub async fn delete_user() -> impl Responder {
        format!("hello from delete user")
    }

As expected, we have four handler functions for our four routes. Each of these is designated as async and returns something that implements the Responder trait. Let's run the project using cargo:

    cargo run
        Finished dev [unoptimized + debuginfo] target(s) in 0.49s
         Running `target/debug/actix-diesel-auth`

In another terminal, we can use curl to access the API once it's done compiling:

    curl 127.0.0.1:8080/users
    hello from get users

    curl -X POST 127.0.0.1:8080/users
    hello from add user

Connecting with a Postgres Database

The most popular crate for database access from Rust applications is Diesel, which provides a type-safe abstraction over SQL. We will use Diesel to connect our API to a backing Postgres database. We will use another crate called R2D2 for connection pooling. Let us modify main.rs, starting with the imports:

    // src/main.rs
    #[macro_use]
    extern crate diesel;

    use actix_web::{dev::ServiceRequest, web, App, Error, HttpServer};
    use diesel::prelude::*;
    use diesel::r2d2::{self, ConnectionManager};

We will use separate modules for each piece of functionality to maintain a clean separation of concerns. Thus, we will need to declare those modules in main.rs:

    // src/main.rs
    mod errors;
    mod handlers;
    mod models;
    mod schema;

We then define a custom type for the connection pool. This step is purely for convenience. If we do not do this, we will need to use the complete type signature later.

    // src/main.rs
    pub type Pool = r2d2::Pool<ConnectionManager<PgConnection>>;
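To see what the alias buys us, here is a rough std-only sketch with a stand-in pool. FakeConnection, FakePool, build_pool, and checkout are all hypothetical and do not reflect how r2d2 is implemented; the point is only that an alias keeps signatures short and that a cloned handle refers to the same underlying connections:

```rust
use std::sync::{Arc, Mutex};

/// Stand-in for a database connection (hypothetical, for illustration only).
struct FakeConnection {
    id: u32,
}

/// The alias spares us from repeating the full type in every signature.
type FakePool = Arc<Mutex<Vec<FakeConnection>>>;

/// Build a pool holding `size` idle connections, numbered from 1.
fn build_pool(size: u32) -> FakePool {
    let connections: Vec<FakeConnection> =
        (1..=size).map(|id| FakeConnection { id }).collect();
    Arc::new(Mutex::new(connections))
}

/// Take one connection out of the pool, if any is idle.
fn checkout(pool: &FakePool) -> Option<FakeConnection> {
    pool.lock().unwrap().pop()
}
```

Cloning a FakePool copies only the handle, so a connection checked out through the clone is also gone from the original.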

We can now move on to our main function:

    // src/main.rs
    // dependencies here
    // module declarations here
    // type declarations here

    #[actix_rt::main]
    async fn main() -> std::io::Result<()> {
        dotenv::dotenv().ok();
        std::env::set_var("RUST_LOG", "actix_web=debug");
        let database_url = std::env::var("DATABASE_URL").expect("DATABASE_URL must be set");

        // create db connection pool
        let manager = ConnectionManager::<PgConnection>::new(database_url);
        let pool: Pool = r2d2::Pool::builder()
            .build(manager)
            .expect("Failed to create pool.");

        // Start http server
        HttpServer::new(move || {
            App::new()
                .data(pool.clone())
                .route("/users", web::get().to(handlers::get_users))
                .route("/users/{id}", web::get().to(handlers::get_user_by_id))
                .route("/users", web::post().to(handlers::add_user))
                .route("/users/{id}", web::delete().to(handlers::delete_user))
        })
        .bind("127.0.0.1:8080")?
        .run()
        .await
    }

The most important change since the previous version is passing the database connection pool to each of the handlers via the .data(pool.clone()) call. The connection string is read from the DATABASE_URL environment variable. We will use a file named .env to hold it:

    touch .env

The next step is to put our environment variable named DATABASE_URL in that file:

    cat .env
    DATABASE_URL=postgres://username:password@localhost/auth0_demo?sslmode=disable
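For illustration, here is a simplified sketch of how a line in this file breaks down into a key and a value. parse_env_line is a hypothetical helper and is not how the dotenv crate is implemented; that crate also handles quoting and other cases this sketch ignores:

```rust
/// Split one `KEY=VALUE` line into its parts.
/// A simplified, hypothetical sketch; it does not handle quoting or escapes.
fn parse_env_line(line: &str) -> Option<(&str, &str)> {
    let line = line.trim();
    // Skip blank lines and `#` comments.
    if line.is_empty() || line.starts_with('#') {
        return None;
    }
    // Split on the first `=` only, since values such as URLs may contain `=`.
    let (key, value) = line.split_once('=')?;
    Some((key.trim(), value.trim()))
}
```

Splitting on the first = matters for our connection string, because the sslmode=disable query parameter contains a second one.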

Note: Make sure you have PostgreSQL installed before running the next command. This is a great resource for setting up PostgreSQL on Mac.

Diesel needs its own setup steps. We start by installing the diesel CLI:

    cargo install diesel_cli --no-default-features --features postgres

Note: If you run into an error here, make sure you've added Cargo's bin directory to your system's PATH. You can do this by running source $HOME/.cargo/env.

Here we tell diesel to build with only the postgres feature. Next, we set up the database:

    diesel setup

This command will create the database named auth0_demo if it does not already exist, along with a migrations directory and a diesel.toml file, which looks like this:

    # For documentation on how to configure this file,
    # see diesel.rs/guides/configuring-diesel-cli

    [print_schema]
    file = "src/schema.rs"

This file can be used to configure diesel's behavior. In our case, we use it to tell diesel where to write the schema file when we run the print-schema command of the diesel CLI.

The next step now is to add our migrations using the CLI:

diesel migration generate add_users

This will create a new directory in the migrations directory with a timestamped name such as 2019-10-30-141014_add_users. It contains two files, up.sql and down.sql. We put the SQL that creates our table in up.sql:

    CREATE TABLE users (
      id SERIAL NOT NULL PRIMARY KEY,
      first_name TEXT NOT NULL,
      last_name TEXT NOT NULL,
      email TEXT NOT NULL,
      created_at TIMESTAMP NOT NULL
    );

The other file is used when diesel needs to revert a migration, so it should undo whatever up.sql does:

    -- This file should undo anything in `up.sql`
    DROP TABLE users;

Having done all that, we are in a position to define our model and schema. In our case, the model is in a file called models.rs in the src directory:

    // src/models.rs
    use crate::schema::*;
    use serde::{Deserialize, Serialize};

    #[derive(Debug, Serialize, Deserialize, Queryable)]
    pub struct User {
        pub id: i32,
        pub first_name: String,
        pub last_name: String,
        pub email: String,
        pub created_at: chrono::NaiveDateTime,
    }

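Our User struct mirrors the columns of the users table. For inserting new rows, we define a second struct in the same file:

    // src/models.rs
    #[derive(Insertable, Debug)]
    #[table_name = "users"]
    pub struct NewUser<'a> {
        pub first_name: &'a str,
        pub last_name: &'a str,
        pub email: &'a str,
        pub created_at: chrono::NaiveDateTime,
    }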

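We use different structures for data going into the database and data coming out of it. NewUser represents a row to insert into the users table and has no id field, since the database generates it, while the User struct represents a row read back by a query. Accordingly, NewUser derives Insertable and User derives Queryable. With this information, diesel can generate the schema.rs file:

    diesel print-schema > src/schema.rs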

In our case, the schema file looks like this:

    // src/schema.rs
    table! {
        users (id) {
            id -> Int4,
            first_name -> Text,
            last_name -> Text,
            email -> Text,
            created_at -> Timestamp,
        }
    }

We can now move on to our handlers where we will add necessary functionality to interact with the database. Like the previous cases, we start with declaring our dependencies:

    // src/handlers.rs
    use super::models::{NewUser, User};
    use super::schema::users::dsl::*;
    use super::Pool;
    use crate::diesel::QueryDsl;
    use crate::diesel::RunQueryDsl;
    use actix_web::{web, Error, HttpResponse};
    use diesel::dsl::{delete, insert_into};
    use serde::{Deserialize, Serialize};
    use std::vec::Vec;

We define a new struct here to represent a user as input JSON to our API. Notice that this struct does not have the id and created_at fields, since the client does not supply them. We add it to handlers.rs:

    // src/handlers.rs
    #[derive(Debug, Serialize, Deserialize)]
    pub struct InputUser {
        pub first_name: String,
        pub last_name: String,
        pub email: String,
    }
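To make the division of labor between the three structs concrete, here is a simplified std-only sketch. UserStore and to_new_user are hypothetical stand-ins for the database and for the conversion done inside a handler, and created_at is a plain u64 rather than a chrono type:

```rust
/// What the client sends: no id and no timestamp.
struct InputUser {
    first_name: String,
    last_name: String,
    email: String,
}

/// What we insert: borrows the input and adds a timestamp.
struct NewUser<'a> {
    first_name: &'a str,
    last_name: &'a str,
    email: &'a str,
    created_at: u64, // stand-in for chrono::NaiveDateTime
}

/// What we read back: includes the id generated by the store.
#[derive(Debug, Clone, PartialEq)]
struct User {
    id: i32,
    first_name: String,
    last_name: String,
    email: String,
    created_at: u64,
}

/// Hypothetical in-memory stand-in for the users table.
#[derive(Default)]
struct UserStore {
    rows: Vec<User>,
}

impl UserStore {
    /// Insert a row and return it with a generated id, much like a SERIAL column.
    fn insert(&mut self, new_user: &NewUser) -> User {
        let user = User {
            id: self.rows.len() as i32 + 1,
            first_name: new_user.first_name.to_string(),
            last_name: new_user.last_name.to_string(),
            email: new_user.email.to_string(),
            created_at: new_user.created_at,
        };
        self.rows.push(user.clone());
        user
    }
}

/// Borrow the fields of the input and attach the timestamp.
fn to_new_user(input: &InputUser, created_at: u64) -> NewUser<'_> {
    NewUser {
        first_name: &input.first_name,
        last_name: &input.last_name,
        email: &input.email,
        created_at,
    }
}
```

The input never carries an id, the insertable form never needs one, and only the value returned by the store has it.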

We can now write the individual handlers, starting with GET /users:

    // src/handlers.rs
    // dependencies here

    // Handler for GET /users
    pub async fn get_users(db: web::Data<Pool>) -> Result<HttpResponse, Error> {
        Ok(web::block(move || get_all_users(db))
            .await
            .map(|user| HttpResponse::Ok().json(user))
            .map_err(|_| HttpResponse::InternalServerError())?)
    }

    fn get_all_users(pool: web::Data<Pool>) -> Result<Vec<User>, diesel::result::Error> {
        let conn = pool.get().unwrap();
        let items = users.load::<User>(&conn)?;
        Ok(items)
    }

We have moved all database interactions to a helper function to keep the code cleaner. In our handler, we block on the helper function and return the results if there were no errors. In case of an error, we return an InternalServerError through the handler's Result. The rest of the handlers are similar in construction.

    // src/handlers.rs
    // dependencies here

    // Handler for GET /users/{id}
    pub async fn get_user_by_id(
        db: web::Data<Pool>,
        user_id: web::Path<i32>,
    ) -> Result<HttpResponse, Error> {
        Ok(
            web::block(move || db_get_user_by_id(db, user_id.into_inner()))
                .await
                .map(|user| HttpResponse::Ok().json(user))
                .map_err(|_| HttpResponse::InternalServerError())?,
        )
    }

    // Handler for POST /users
    pub async fn add_user(
        db: web::Data<Pool>,
        item: web::Json<InputUser>,
    ) -> Result<HttpResponse, Error> {
        Ok(web::block(move || add_single_user(db, item))
            .await
            .map(|user| HttpResponse::Created().json(user))
            .map_err(|_| HttpResponse::InternalServerError())?)
    }

    // Handler for DELETE /users/{id}
    pub async fn delete_user(
        db: web::Data<Pool>,
        user_id: web::Path<i32>,
    ) -> Result<HttpResponse, Error> {
        Ok(
            web::block(move || delete_single_user(db, user_id.into_inner()))
                .await
                .map(|user| HttpResponse::Ok().json(user))
                .map_err(|_| HttpResponse::InternalServerError())?,
        )
    }

    fn db_get_user_by_id(pool: web::Data<Pool>, user_id: i32) -> Result<User, diesel::result::Error> {
        let conn = pool.get().unwrap();
        users.find(user_id).get_result::<User>(&conn)
    }

    fn add_single_user(
        db: web::Data<Pool>,
        item: web::Json<InputUser>,
    ) -> Result<User, diesel::result::Error> {
        let conn = db.get().unwrap();
        let new_user = NewUser {
            first_name: &item.first_name,
            last_name: &item.last_name,
            email: &item.email,
            created_at: chrono::Local::now().naive_local(),
        };
        let res = insert_into(users).values(&new_user).get_result(&conn)?;
        Ok(res)
    }

    fn delete_single_user(db: web::Data<Pool>, user_id: i32) -> Result<usize, diesel::result::Error> {
        let conn = db.get().unwrap();
        let count = delete(users.find(user_id)).execute(&conn)?;
        Ok(count)
    }
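All four handlers follow the same shape: run the database call, map a success into a response, and map any failure into a server error. Stripped of actix-web, that shape can be sketched as follows, where Response and respond are hypothetical stand-ins for HttpResponse and the handler body:

```rust
use std::fmt::Debug;

/// Hypothetical stand-in for an HTTP response: a status code and a body.
#[derive(Debug, PartialEq)]
struct Response {
    status: u16,
    body: String,
}

/// Turn the outcome of a database call into a response.
/// `map` rewrites the success value; `map_err` rewrites the error.
fn respond<T: Debug, E>(result: Result<T, E>) -> Result<Response, Response> {
    result
        .map(|value| Response {
            status: 200,
            body: format!("{:?}", value),
        })
        // The original error is discarded, as in the handlers above.
        .map_err(|_| Response {
            status: 500,
            body: String::new(),
        })
}
```

One consequence of this design is that every database failure, including a lookup for an id that does not exist, surfaces to the client as a 500.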

Lastly, we will need to implement our custom errors in a new file, src/errors.rs:

    // src/errors.rs
    use actix_web::{error::ResponseError, HttpResponse};
    use derive_more::Display;

    #[derive(Debug, Display)]
    pub enum ServiceError {
        #[display(fmt = "Internal Server Error")]
        InternalServerError,

        #[display(fmt = "BadRequest: {}", _0)]
        BadRequest(String),

        #[display(fmt = "JWKSFetchError")]
        JWKSFetchError,
    }

    // impl ResponseError trait allows to convert our errors into http responses with appropriate data
    impl ResponseError for ServiceError {
        fn error_response(&self) -> HttpResponse {
            match self {
                ServiceError::InternalServerError => {
                    HttpResponse::InternalServerError().json("Internal Server Error, Please try later")
                }
                ServiceError::BadRequest(ref message) => HttpResponse::BadRequest().json(message),
                ServiceError::JWKSFetchError => {
                    HttpResponse::InternalServerError().json("Could not fetch JWKS")
                }
            }
        }
    }
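The Display implementation above is generated by derive_more. For comparison, a hand-written std-only equivalent might look roughly like this; the status_code method is a hypothetical addition that only summarizes which status each variant maps to in error_response:

```rust
use std::fmt;

#[derive(Debug)]
enum ServiceError {
    InternalServerError,
    BadRequest(String),
    JWKSFetchError,
}

// Hand-written counterpart of the `#[display(fmt = "...")]` attributes.
impl fmt::Display for ServiceError {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        match self {
            ServiceError::InternalServerError => write!(f, "Internal Server Error"),
            ServiceError::BadRequest(message) => write!(f, "BadRequest: {}", message),
            ServiceError::JWKSFetchError => write!(f, "JWKSFetchError"),
        }
    }
}

impl ServiceError {
    /// Status code paired with each variant (hypothetical helper, for illustration).
    fn status_code(&self) -> u16 {
        match self {
            ServiceError::BadRequest(_) => 400,
            ServiceError::InternalServerError | ServiceError::JWKSFetchError => 500,
        }
    }
}
```

Keeping the message and the status next to the enum means a new variant cannot be added without deciding how it is presented to the client.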

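At the top level, we have a generic ServiceError enum that lists the errors our application can return: ServiceError::InternalServerError, ServiceError::BadRequest, and ServiceError::JWKSFetchError. Implementing the ResponseError trait lets actix-web convert each of them into an HTTP response.

We have changed our handlers to return a Result that holds an HttpResponse on success and an Error on failure. In both cases, the client ends up receiving an HttpResponse.

Having set up our application, navigate to the project directory in a terminal window. We will then apply our database migration using the following command: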

diesel migration run

Now we are ready to run our application using the following command:

cargo run

We should now be able to interact with this API again using curl:

    curl -v -H "Content-Type: application/json" -X POST -d '{"first_name": "foo1", "last_name": "bar1", "email": "foo1@bar.com"}' 127.0.0.1:8080/users
    *   Trying 127.0.0.1...
    * TCP_NODELAY set
    * Connected to 127.0.0.1 (127.0.0.1) port 8080 (#0)
    > POST /users HTTP/1.1
    > Host: 127.0.0.1:8080
    > User-Agent: curl/7.64.1
    > Accept: */*
    >
    < HTTP/1.1 200 OK
    < content-length: 229
    < content-type: application/json
    < date: Mon, 13 Jan 2020 11:03:37 GMT
    <
    * Connection #0 to host 127.0.0.1 left intact
    {"id":10,"first_name":"foo1","last_name":"bar1","email":"foo1@bar.com","created_at":"2019-10-31T11:20:58.710236"}* Closing connection 0

    curl -v 127.0.0.1:8080/users
    *   Trying 127.0.0.1...
    * TCP_NODELAY set
    * Connected to 127.0.0.1 (127.0.0.1) port 8080 (#0)
    > GET /users HTTP/1.1
    > Host: 127.0.0.1:8080
    > User-Agent: curl/7.64.1
    > Accept: */*
    >
    < HTTP/1.1 200 OK
    < content-length: 229
    < content-type: application/json
    < date: Mon, 13 Jan 2020 11:10:10 GMT
    <
    * Connection #0 to host 127.0.0.1 left intact
    [{"id":10,"first_name":"foo1","last_name":"bar1","email":"foo1@bar.com","created_at":"2019-10-31T11:20:58.710236"}]* Closing connection 0

Securing the API

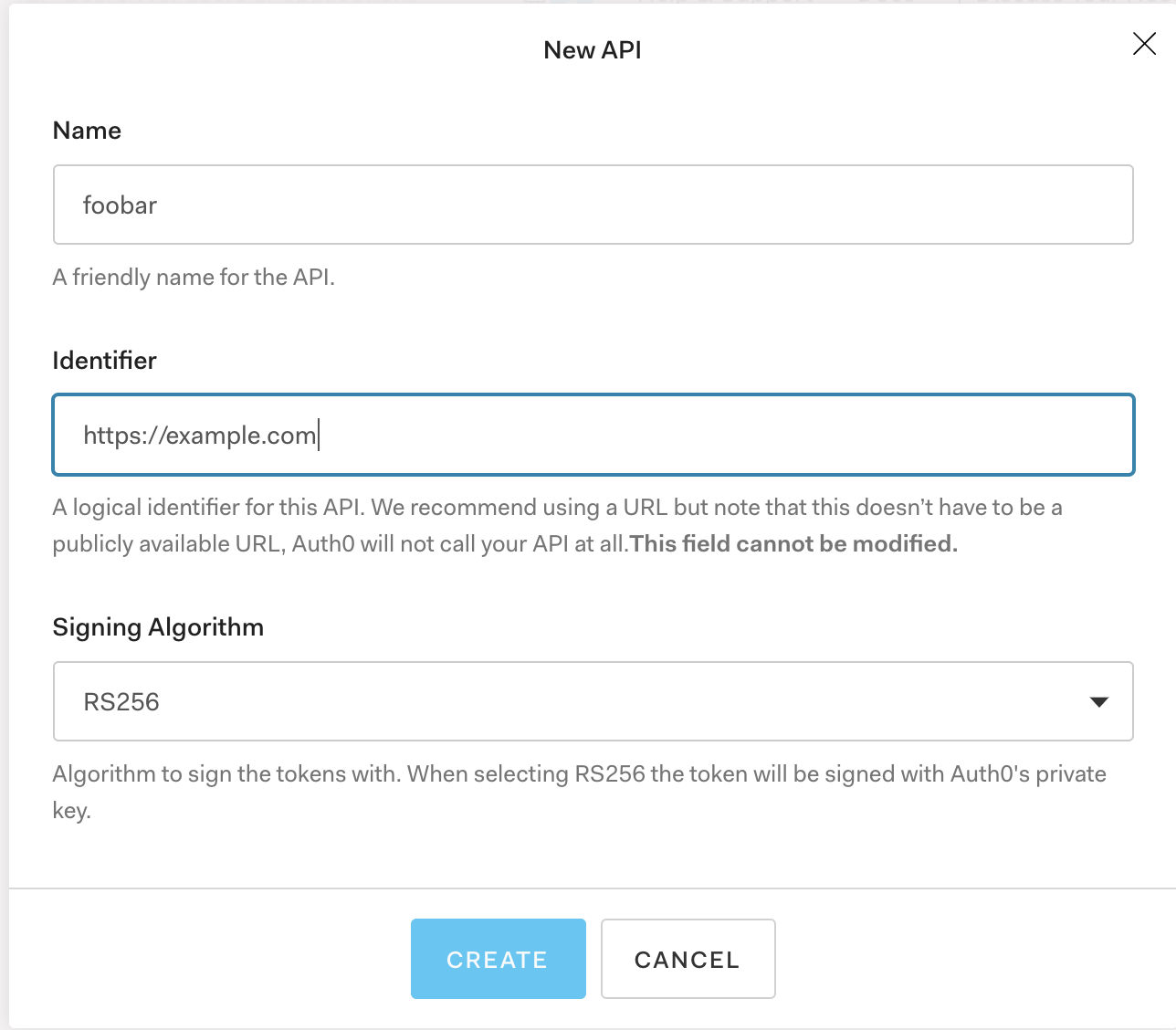

The next step is to implement JWT-based authentication for our API. We will use Auth0 as our authentication provider. Let's start by creating a new Auth0 tenant. First, sign up for a free Auth0 account, click on "Create Application", choose a name, select "Regular web application", and press "Create". The next step is to create a new API for our application. Click on "APIs", then "Create API", choose a name and a domain identifier, and click "Create".

Please keep this tab open in your browser since we will need the authentication token from here later. Since we want to implement bearer-based authentication, we will send this token in an Authorization header.
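As a rough sketch of what bearer-based authentication means on the wire, the header value has the form Bearer <token>. The bearer_token helper below is hypothetical and only illustrates the format; in our application, a supporting crate handles this step for us:

```rust
/// Extract the token from the value of an `Authorization` header.
/// (`bearer_token` is a hypothetical helper used only for illustration.)
fn bearer_token(header_value: &str) -> Option<&str> {
    // The value is a scheme name, a space, and then the credentials.
    let (scheme, token) = header_value.trim().split_once(' ')?;
    let token = token.trim();
    // Scheme names are compared case-insensitively; an empty token is rejected.
    if scheme.eq_ignore_ascii_case("Bearer") && !token.is_empty() {
        Some(token)
    } else {
        None
    }
}
```

A value such as Bearer abc.def.ghi yields the token, while a different scheme or a missing token yields None.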

In our code, we will use another supporting crate, actix_web_httpauth, to extract the bearer token from each request. If the token is missing or fails validation, the client receives a 401 response. We start with a validator function in main.rs:

    // src/main.rs
    use actix_web_httpauth::extractors::bearer::{BearerAuth, Config};
    use actix_web_httpauth::extractors::AuthenticationError;
    use actix_web_httpauth::middleware::HttpAuthentication;

    async fn validator(req: ServiceRequest, credentials: BearerAuth) -> Result<ServiceRequest, Error> {
        // Reuse the bearer config registered on the app, or fall back to the default
        let config = req
            .app_data::<Config>()
            .map(|data| data.get_ref().clone())
            .unwrap_or_else(Default::default);
        match auth::validate_token(credentials.token()) {
            // Let the request through only when validation succeeded
            Ok(true) => Ok(req),
            // Invalid token or an error during validation: reject the request
            _ => Err(AuthenticationError::from(config).into()),
        }
    }

Our main function now wraps the application in the authentication middleware. Only the parts that change are shown:

    // src/main.rs
    // dependencies here

    mod auth;

    HttpServer::new(move || {
        let auth = HttpAuthentication::bearer(validator);
        App::new()
            .wrap(auth)
            .data(pool.clone())
            .route("/users", web::get().to(handlers::get_users))
            .route("/users/{id}", web::get().to(handlers::get_user_by_id))
            .route("/users", web::post().to(handlers::add_user))
            .route("/users/{id}", web::delete().to(handlers::delete_user))
    })
    .bind("127.0.0.1:8080")?
    .run()
    .await

We delegate actual token validation to a helper function in a module called auth, which lives in a new file, src/auth.rs:

    // src/auth.rs
    use crate::errors::ServiceError;
    use alcoholic_jwt::{token_kid, validate, Validation, JWKS};
    use serde::{Deserialize, Serialize};
    use std::error::Error;

    #[derive(Debug, Serialize, Deserialize)]
    struct Claims {
        sub: String,
        company: String,
        exp: usize,
    }

    pub fn validate_token(token: &str) -> Result<bool, ServiceError> {
        let authority = std::env::var("AUTHORITY").expect("AUTHORITY must be set");
        // Fetch the key set published by the authority
        let jwks = fetch_jwks(&format!("{}{}", authority.as_str(), ".well-known/jwks.json"))
            .expect("failed to fetch jwks");
        let validations = vec![Validation::Issuer(authority), Validation::SubjectPresent];
        // Read the key ID from the token so we can pick the matching key
        let kid = match token_kid(token) {
            Ok(res) => res.expect("failed to decode kid"),
            Err(_) => return Err(ServiceError::JWKSFetchError),
        };
        let jwk = jwks.find(&kid).expect("Specified key not found in set");
        let res = validate(token, jwk, validations);
        Ok(res.is_ok())
    }
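token_kid reads the key ID from the token's header. For orientation, a JWT in its compact form consists of three dot-separated segments: header, payload, and signature. The split_jwt function below is a hypothetical helper that only shows this layout; it does no decoding or verification and is not part of alcoholic_jwt:

```rust
/// Split a compact JWT into its header, payload, and signature segments.
/// (`split_jwt` is a hypothetical helper; it performs no decoding or verification.)
fn split_jwt(token: &str) -> Option<(&str, &str, &str)> {
    let mut parts = token.split('.');
    let header = parts.next()?;
    let payload = parts.next()?;
    let signature = parts.next()?;
    // Reject tokens with extra segments or with an empty header or payload.
    if parts.next().is_some() || header.is_empty() || payload.is_empty() {
        return None;
    }
    Some((header, payload, signature))
}
```

The key ID lives in the first segment, which is why it can be read before the signature has been checked.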

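The validate_token function returns a ServiceError when it cannot read the key ID from the token. To fetch the key set, we use reqwest to make a GET request to the JWKS endpoint. Paste the following function below validate_token:

    // src/auth.rs
    fn fetch_jwks(uri: &str) -> Result<JWKS, Box<dyn Error>> {
        let mut res = reqwest::get(uri)?;
        let val = res.json::<JWKS>()?;
        Ok(val)
    }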

Before we start, we will need to add another environment variable to our .env file. This will represent the domain we want to validate our token against. Any token that is not issued by this domain should fail validation. In our validation function, we get that domain and fetch the set of keys to validate our token from Auth0. We then use another crate called alcoholic_jwt for the actual validation. Finally, we return a boolean indicating the validation result.
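The key set lives at a well-known path under the authority. The code in this article concatenates the two strings directly, so the result depends on AUTHORITY ending in a slash. A slightly more forgiving variant, shown here as a hypothetical jwks_url helper that is not part of the original code, could normalize the slash first:

```rust
/// Build the JWKS URL for an authority, tolerating a missing trailing slash.
/// (`jwks_url` is a hypothetical helper, not part of the article's code.)
fn jwks_url(authority: &str) -> String {
    // Drop any trailing slashes, then add exactly one before the path.
    format!("{}/.well-known/jwks.json", authority.trim_end_matches('/'))
}
```

Note that the issuer check still compares AUTHORITY as written, so the value in .env should match the issuer in your tokens exactly.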

Here is how the .env file should look now:

    # .env
    DATABASE_URL=postgres://localhost/auth0_demo?sslmode=disable
    AUTHORITY=https://example.com/

Note the trailing slash at the end of the URL in AUTHORITY, which is needed because the code appends .well-known/jwks.json directly to it. Let's run the application again and call the API with curl:

    curl -v 127.0.0.1:8080/users
    *   Trying 127.0.0.1...
    * TCP_NODELAY set
    * Connected to 127.0.0.1 (127.0.0.1) port 8080 (#0)
    > GET /users HTTP/1.1
    > Host: 127.0.0.1:8080
    > User-Agent: curl/7.64.1
    > Accept: */*
    >
    < HTTP/1.1 401 Unauthorized
    < content-length: 0
    < www-authenticate: Bearer
    < date: Mon, 13 Jan 2020 11:59:21 GMT
    <
    * Connection #0 to host 127.0.0.1 left intact
    * Closing connection 0

As expected, this failed with a 401 Unauthorized because we did not send a token. If we store a valid token in a variable named TOKEN and send it in the Authorization header, we should get a 200. Copy the test token from the Auth0 tab we left open, then set it to an environment variable, as shown below:

    export TOKEN=yourtoken
    curl -H "Authorization: Bearer $TOKEN" -v 127.0.0.1:8080/users
    *   Trying 127.0.0.1...
    * TCP_NODELAY set
    * Connected to 127.0.0.1 (127.0.0.1) port 8080 (#0)
    > GET /users HTTP/1.1
    > Host: 127.0.0.1:8080
    > User-Agent: curl/7.64.1
    > Accept: */*
    > Authorization: Bearer ****
    >
    < HTTP/1.1 200 OK
    < content-length: 229
    < content-type: application/json
    < date: Mon, 13 Jan 2020 12:00:58 GMT
    <
    * Connection #0 to host 127.0.0.1 left intact
    [{"id":10,"first_name":"foo1","last_name":"bar1","email":"foo1@bar.com","created_at":"2019-10-31T11:20:58.710236"},{"id":11,"first_name":"foo2","last_name":"bar2","email":"foo1@bar.com","created_at":"2020-01-13T11:03:29.489640"}]* Closing connection 0

Summary

In this article, we wrote a simple CRUD API based on actix-web using Rust. We implemented authentication using Auth0 and some simple token validation. This should give you a good start at implementing your own APIs based on actix. The sample code is located here. Please let me know if you have any questions in the comments below.

About the author

Abhishek Chanda

Senior Systems Engineer