TL;DR: In today's fast-moving, information-rich world, it is becoming more necessary to build applications that are intelligent in the way they process the data they are fed. Artificial Intelligence is quickly becoming an essential tool in software development. In this article, we will look at the ML Kit mobile SDK, which brings Google's machine learning expertise to mobile developers in an easy-to-use package. We will look at the various APIs offered by the SDK, and then we will take one of the APIs for a test drive by creating an Android application that makes use of it. You can find the code for the application in this GitHub repository.

“Google I/O 2018 enabled @Android developers to take advantage of some cool #MachineLearning APIs. Learn what are the new APIs and create a simple app that recognizes objects on images.”


Introduction

In today's information-rich world, people have come to expect their technology to be smart. We are seeing increased adoption of Artificial Intelligence (AI) in the development of intelligent software. AI is quickly becoming an essential tool in software development.

Luckily for developers, there are various services that make it easier and faster to add Artificial Intelligence to apps without needing much experience in the field. There is a growing number of AI-related APIs on the market, such as Amazon's AWS Machine Learning APIs, IBM Watson and Google Cloud AI. In this article, we'll take a look at the ML Kit mobile SDK that was introduced at Google I/O 2018.

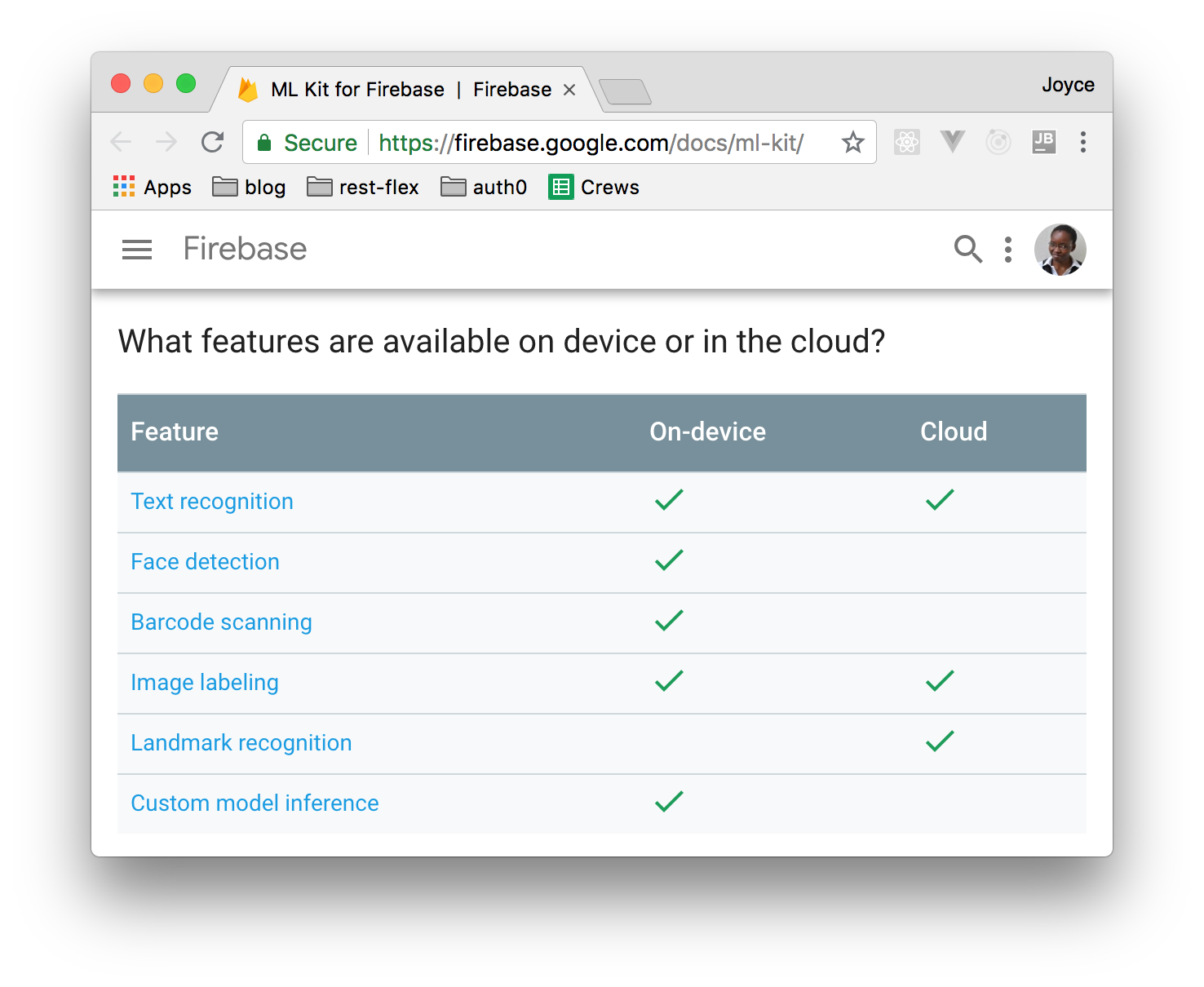

ML Kit is a mobile SDK that enables you to add powerful machine learning features to a mobile application. It supports both Android and iOS and offers the same features on both platforms. The SDK is part of Firebase and bundles together various machine learning technologies from Google, such as the Cloud Vision API, the Android Neural Networks API and TensorFlow Lite. It comes with a set of ready-to-use APIs for common mobile use cases: face detection, text recognition, barcode scanning, image labeling and landmark recognition. These are offered as on-device APIs, cloud-based APIs, or both. On-device APIs process data quickly, are free to use and don't require a network connection. Cloud-based APIs offer a higher level of accuracy because they leverage the power of Google Cloud Platform's machine learning technologies. All cloud-based APIs are premium services, with a free quota in place.
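To make the trade-off concrete, here is a minimal plain-Java sketch of how an app might decide between the two variants of an API that is offered in both forms. The `Backend` enum, the `BackendChooser.choose()` method and its inputs are hypothetical and not part of ML Kit. In particular, the count of remaining free cloud calls is assumed to be tracked by the app itself.

```java
/** Hypothetical: the two places an ML Kit feature can run. */
enum Backend { ON_DEVICE, CLOUD }

/** Hypothetical helper; not part of ML Kit. */
final class BackendChooser {

    private BackendChooser() {}

    /**
     * Chooses where to run a request for an API that has both variants.
     *
     * @param preferAccuracy      true if this feature benefits from the cloud model's higher accuracy
     * @param networkAvailable    whether the device is currently online
     * @param cloudCallsRemaining calls left in the free cloud quota (app-side bookkeeping, an assumption)
     */
    static Backend choose(boolean preferAccuracy, boolean networkAvailable, int cloudCallsRemaining) {
        if (preferAccuracy && networkAvailable && cloudCallsRemaining > 0) {
            return Backend.CLOUD;
        }
        // Otherwise stay on-device: it is fast, free and needs no connection.
        return Backend.ON_DEVICE;
    }
}
```

Switching back to the on-device variant once the free quota is used up is a cost-saving choice made for this sketch, not something ML Kit requires.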

In this article, we'll briefly go over what each of the ML Kit APIs offers before taking a look at how to use one of the APIs in an Android application.

Text Recognition with ML Kit SDK

With the text recognition API, your app can recognize text in any Latin-based language (and more when using the cloud-based API). Use cases include automating data entry from physical records into digital format, improving accessibility by identifying text in images and reading it out to users, and organizing photos based on their text content.

Text recognition is available both as an on-device and a cloud-based API. The on-device API provides real-time processing (ideal for a camera or video feed), while the cloud-based one provides higher-accuracy text recognition and can identify a broader range of languages and special characters.

Face Detection with ML Kit SDK

The face detection API can detect human faces in visual media (digital images and video). Given an image, the API returns the position, size and orientation (the angle at which the face is oriented with respect to the camera) of any detected faces. For each detected face, you can also get landmark and classification information. Landmarks are points of interest within a face, such as the right eye, the left eye, the base of the nose and the bottom of the mouth. Classification determines whether the face displays certain facial characteristics. ML Kit currently supports two classifications: eyes open and smiling. The API is available on-device.
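Classification results are most useful once app code turns them into yes/no decisions. The sketch below assumes, for illustration, that each classification arrives as a probability between 0 and 1, with a sentinel value when it was not computed. The `FaceClassification` class, its fields and the `NOT_COMPUTED` value are hypothetical stand-ins, not ML Kit's own types.

```java
/** Hypothetical stand-in for the classification data of one detected face. */
final class FaceClassification {

    /** Sentinel used by this sketch when a classification was not computed. */
    static final float NOT_COMPUTED = -1f;

    final float smilingProbability;
    final float leftEyeOpenProbability;
    final float rightEyeOpenProbability;

    FaceClassification(float smilingProbability, float leftEyeOpenProbability, float rightEyeOpenProbability) {
        this.smilingProbability = smilingProbability;
        this.leftEyeOpenProbability = leftEyeOpenProbability;
        this.rightEyeOpenProbability = rightEyeOpenProbability;
    }

    /** True only if the smiling probability was computed and reaches the threshold. */
    boolean isSmiling(float threshold) {
        return meets(smilingProbability, threshold);
    }

    /** True only if both eye-open probabilities were computed and reach the threshold. */
    boolean eyesOpen(float threshold) {
        return meets(leftEyeOpenProbability, threshold) && meets(rightEyeOpenProbability, threshold);
    }

    private static boolean meets(float probability, float threshold) {
        return probability != NOT_COMPUTED && probability >= threshold;
    }
}
```

For example, a camera app could wait until every face in the frame satisfies both `isSmiling(0.7f)` and `eyesOpen(0.7f)` before taking a picture. The 0.7 threshold is an arbitrary example value.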

“@Android developers can now detect faces with ease using the new #MachineLearning SDK introduced by Google.”


Barcode Scanning with ML Kit SDK

With the barcode scanning API, your app can read data encoded using most standard barcode formats. It is available on-device and supports the following barcode formats:

- 1D barcodes: EAN-13, EAN-8, UPC-A, UPC-E, Code-39, Code-93, Code-128, ITF, Codabar

- 2D barcodes: QR Code, Data Matrix, PDF-417, AZTEC

The Barcode Scanning API automatically parses structured data stored using one of the supported 2D formats. Supported information types include:

- URLs

- Contact information (VCARD, etc.)

- Calendar events

- Email addresses

- Phone numbers

- SMS message prompts

- ISBNs

- WiFi connection information

- Geo-location (latitude and longitude)

- AAMVA-standard driver information (license/ID)
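ML Kit does this parsing for you, but it helps to see what such a structured payload looks like. The hand-rolled sketch below parses the widely used `WIFI:` QR convention, for example `WIFI:S:HomeNet;T:WPA;P:secret;;`, where a backslash escapes special characters. It only illustrates the idea. `WifiQrParser` is a hypothetical class, not ML Kit's implementation, and it ignores less common fields and edge cases.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

/** Hypothetical parser for "WIFI:" QR payloads; not part of ML Kit. */
final class WifiQrParser {

    private static final String PREFIX = "WIFI:";

    private WifiQrParser() {}

    /**
     * Returns the payload's fields keyed by their tags (S = SSID, T = security type,
     * P = password), or an empty Optional if this is not a WIFI payload.
     */
    static Optional<Map<String, String>> parse(String payload) {
        if (payload == null || !payload.startsWith(PREFIX)) {
            return Optional.empty();
        }
        Map<String, String> fields = new HashMap<>();
        StringBuilder entry = new StringBuilder();
        String body = payload.substring(PREFIX.length());
        for (int i = 0; i < body.length(); i++) {
            char c = body.charAt(i);
            if (c == '\\' && i + 1 < body.length()) {
                entry.append(body.charAt(++i)); // escaped character is taken literally
            } else if (c == ';') {
                addField(fields, entry.toString()); // ';' ends a "TAG:value" entry
                entry.setLength(0);
            } else {
                entry.append(c);
            }
        }
        addField(fields, entry.toString()); // handle a final entry without a trailing ';'
        return Optional.of(fields);
    }

    private static void addField(Map<String, String> fields, String entry) {
        int colon = entry.indexOf(':'); // the first ':' separates the tag from its value
        if (colon > 0) {
            fields.put(entry.substring(0, colon), entry.substring(colon + 1));
        }
    }
}
```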

Image Labeling with ML Kit SDK

The image labeling API can recognize entities in an image. When used, the API returns a list of recognized entities, each with a score indicating the confidence the ML model has in its relevance. The API can be used for such tasks as automatic metadata generation and content moderation.

Image labeling is available both as an on-device and cloud-based API. The device-based API supports 400+ labels that cover the most commonly found concepts in photos (see examples) while the cloud-based API supports 10,000+ labels (see examples).
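As an illustration of the content moderation use case mentioned above, the sketch below flags an image when any label from a blocklist is returned with at least a given confidence. The `ScoredLabel` and `ModerationCheck` classes, the blocklist contents and the threshold are hypothetical. In a real app, the label text and confidence would come from the labeling API's results.

```java
import java.util.List;
import java.util.Locale;
import java.util.Set;

/** Hypothetical stand-in for one label returned by an image labeling API. */
final class ScoredLabel {
    final String text;
    final float confidence;

    ScoredLabel(String text, float confidence) {
        this.text = text;
        this.confidence = confidence;
    }
}

/** Hypothetical moderation rule built on top of image labels. */
final class ModerationCheck {

    private ModerationCheck() {}

    /**
     * Returns true if any label in blockedLabels (expected in lower case) is present
     * with a confidence of at least minConfidence.
     */
    static boolean shouldFlag(List<ScoredLabel> labels, Set<String> blockedLabels, float minConfidence) {
        for (ScoredLabel label : labels) {
            String normalized = label.text.toLowerCase(Locale.ROOT); // compare case-insensitively
            if (label.confidence >= minConfidence && blockedLabels.contains(normalized)) {
                return true;
            }
        }
        return false;
    }
}
```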

Landmark Recognition with ML Kit SDK

The landmark recognition API can recognize well-known landmarks in an image. When given an image, the API returns landmarks that were recognized, coordinates of the position of each landmark in the image and each landmark's geographic coordinates. The API can be used to generate metadata for images or to customize some features according to the content a user shares. Landmark recognition is only available as a cloud-based API.

Using Custom Models with ML Kit SDK

If you are an experienced machine learning engineer and would prefer not to use the pre-built ML Kit models, you can use your own custom TensorFlow Lite models with ML Kit. The models can either be hosted on Firebase or they can be bundled with the app. Hosting the model on Firebase reduces your app's binary size while also ensuring that the app is always working with the most up-to-date version of the model. Storing the model locally on the device makes for faster processing. You can choose to support both on-device and cloud-hosted models in your app. By using both, you make the most recent version of the model available to your app while also ensuring that the app's ML features are always functional even if the Firebase-hosted model is unavailable (perhaps due to network issues).
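The fallback strategy described above can be sketched in plain Java: try the Firebase-hosted model first, and fall back to the bundled one if it is unavailable. `ModelSource`, `ModelResolver` and the `remoteLookup` supplier are hypothetical placeholders. ML Kit's own model-management classes are not shown here.

```java
import java.util.Optional;
import java.util.function.Supplier;

/** Hypothetical description of a TensorFlow Lite model the app could use. */
final class ModelSource {
    final String name;
    final boolean hostedOnFirebase;

    ModelSource(String name, boolean hostedOnFirebase) {
        this.name = name;
        this.hostedOnFirebase = hostedOnFirebase;
    }
}

/** Hypothetical resolver; not part of ML Kit. */
final class ModelResolver {

    private ModelResolver() {}

    /**
     * Prefers the Firebase-hosted model (the most recent version) and falls back to the model
     * bundled with the app when the hosted one is missing or the lookup fails.
     */
    static ModelSource resolve(Supplier<Optional<ModelSource>> remoteLookup, ModelSource bundled) {
        try {
            return remoteLookup.get().orElse(bundled);
        } catch (RuntimeException lookupFailed) {
            // e.g. no network connection: keep ML features working with the bundled model
            return bundled;
        }
    }
}
```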

Upcoming APIs

When ML Kit was released, Google also announced plans to release two more APIs in the near future: the Smart Reply and Face Contour APIs.

The Smart Reply API will allow you to support contextual messaging replies in your app. The API will provide suggested text snippets that fit the context of messages it is sent, similar to the suggested-response feature we see in the Android Messages app.

The Face Contour API will be an addition to the Face Detection API. It will provide a high-density face contour. This will enable you to perform much more precise operations on faces than you can with the Face Detection API. To see a preview of the API in use, you can take a look at this YouTube video.

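Summary of On-Device and In-Cloud Features

| API | On-device | Cloud |
| --- | --- | --- |
| Text recognition | Yes | Yes |
| Face detection | Yes | No |
| Barcode scanning | Yes | No |
| Image labeling | Yes | Yes |
| Landmark recognition | No | Yes |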

Image Labeling in an Android App

To see one of the APIs in action, we will create an application that uses the Image Labeling API to identify the contents of an image. The APIs share some similarities when it comes to integration, so knowing how to use one can help you understand how to implement the others.

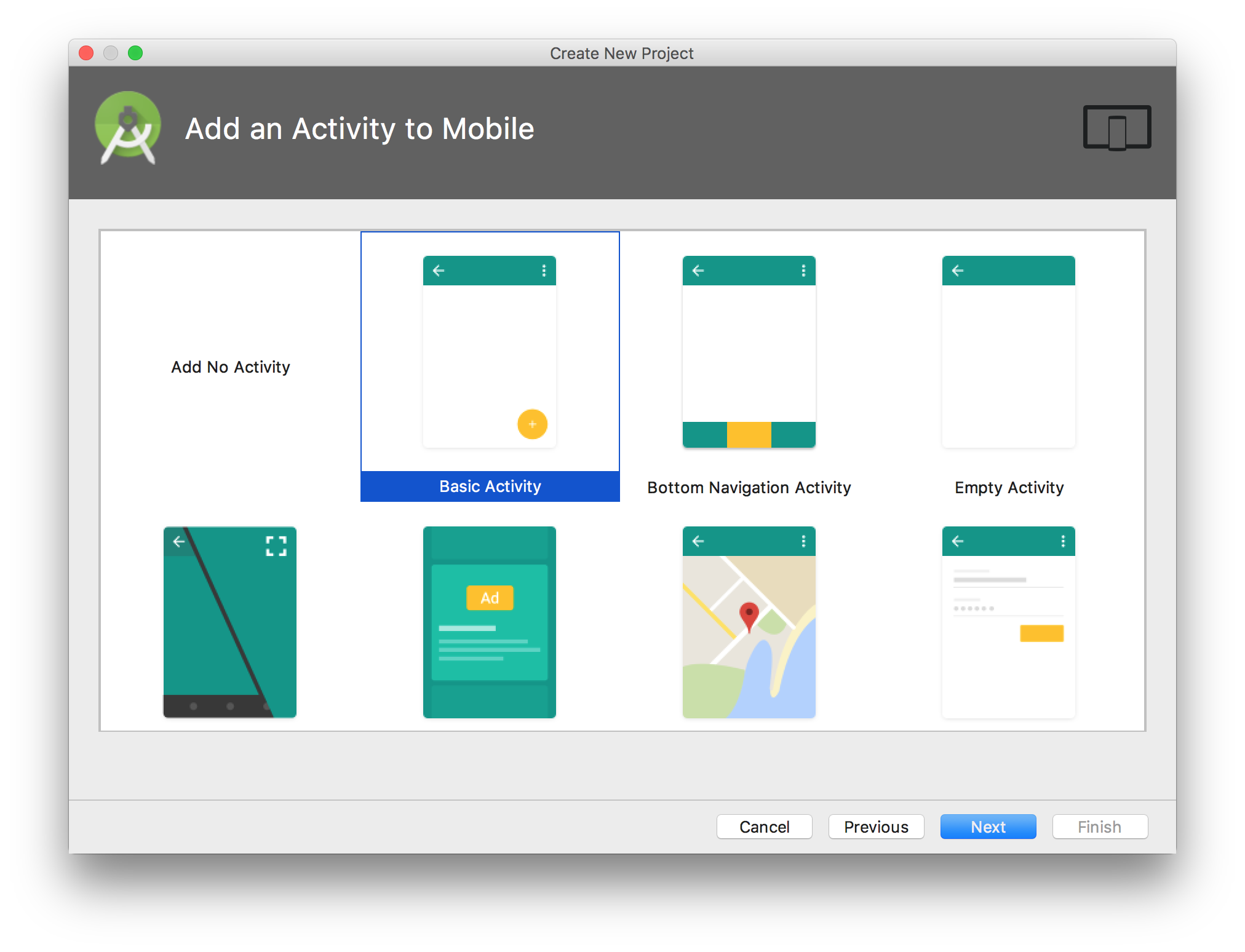

To get started, create a new project in Android Studio. Give your application a name; I named mine

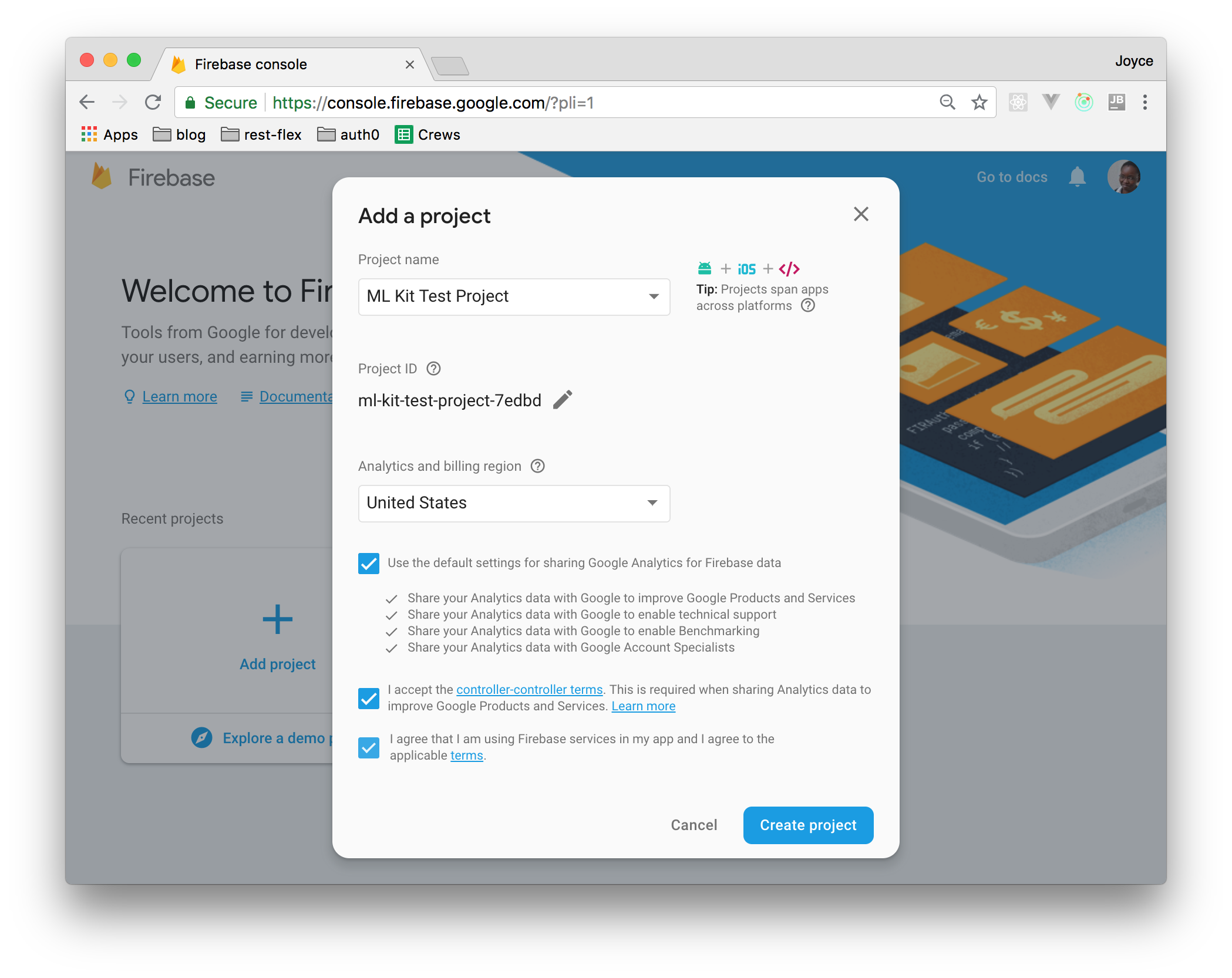

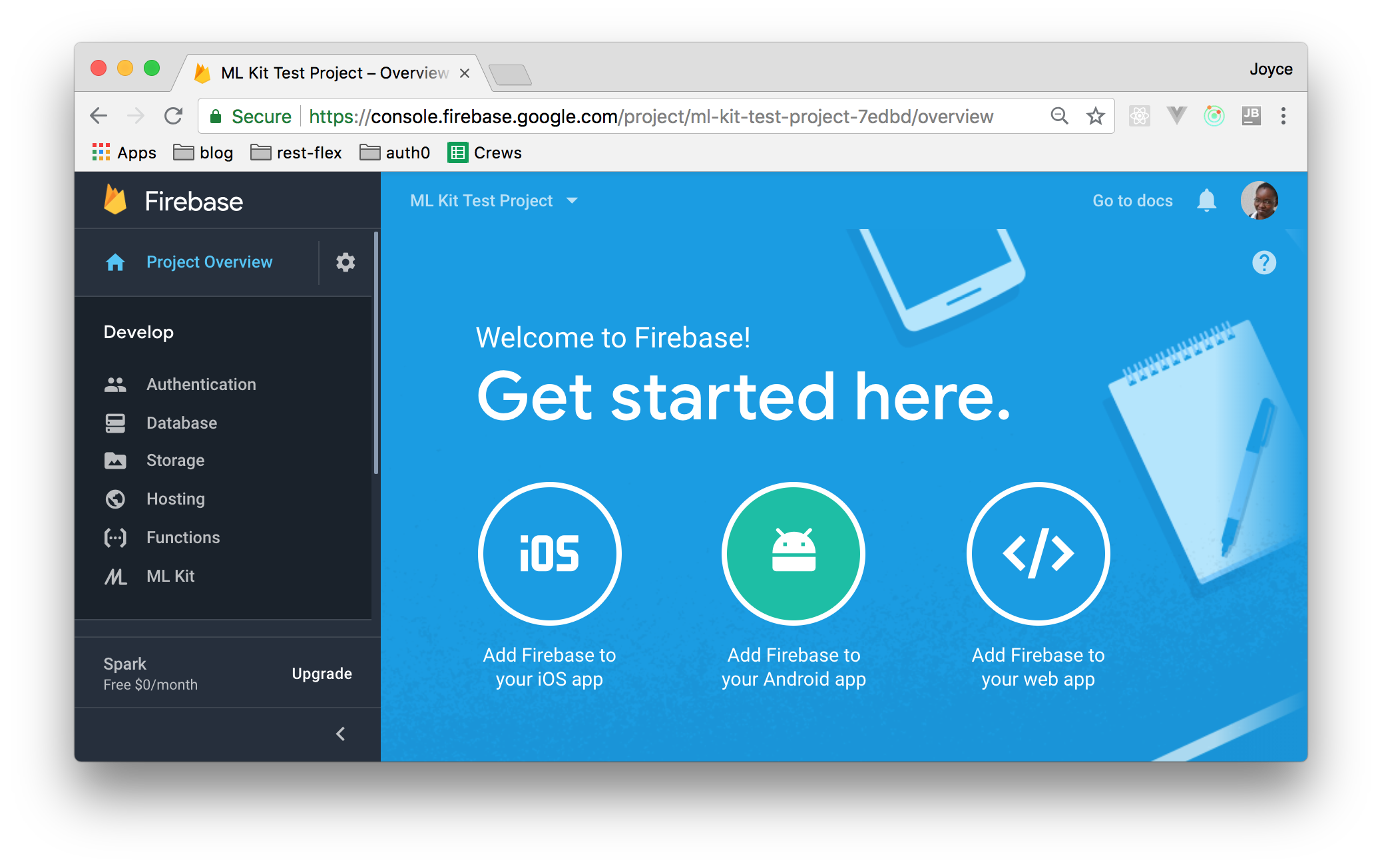

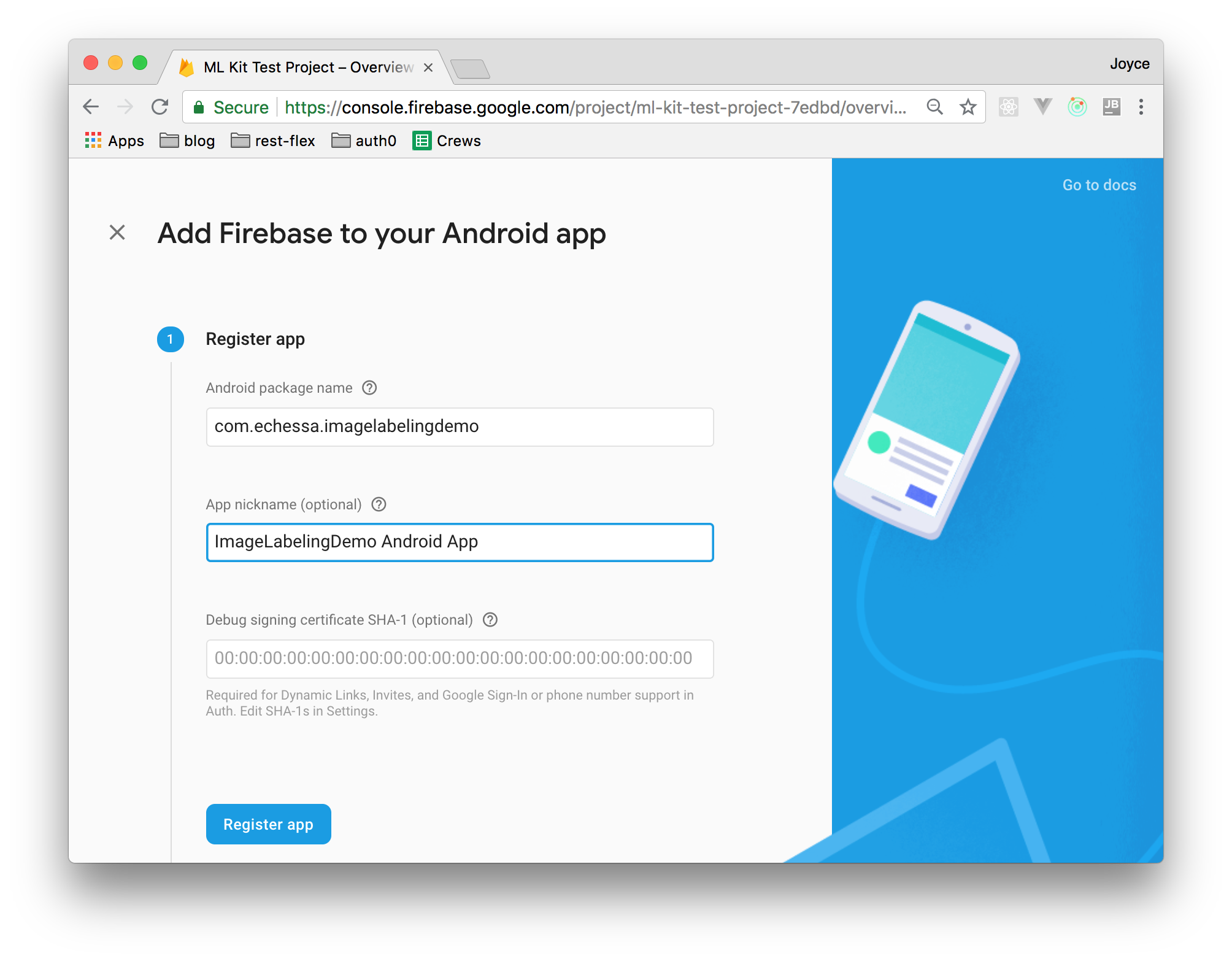

ImageLabelingDemoBasic ActivityMainActivityTo add Firebase to your app, first, create a Firebase project in the Firebase console.

On the dashboard, select

Add Firebase to your Android appFill out the provided form with your app's details. For this project, you only need to provide a package name (you can find this in your Android project's

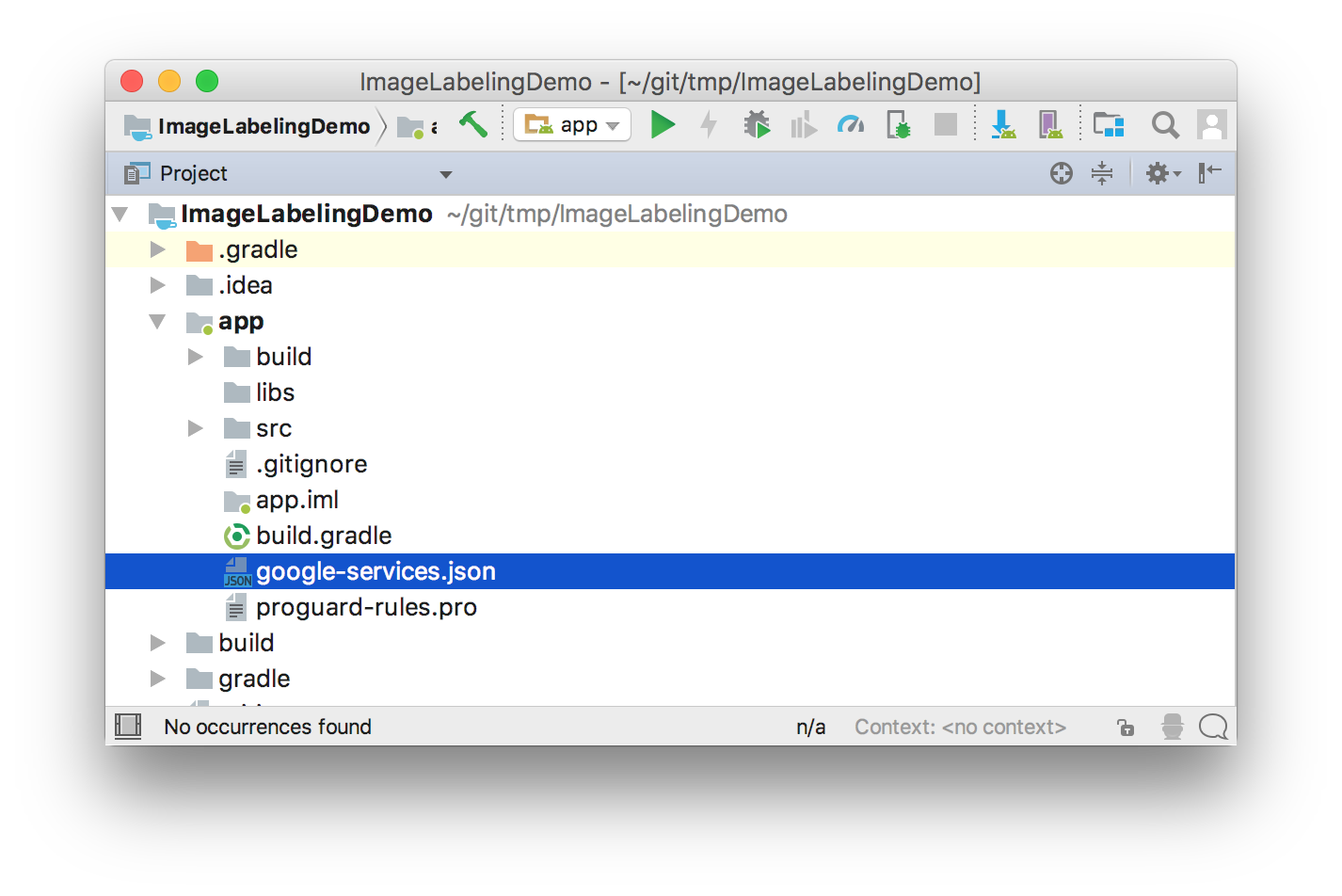

AndroidManifest.xmlAfter the app has been registered, you will see a button you can use to download a config file named

google-services.jsonThe Google services plugin for Gradle loads the

google-services.jsonbuild.gradledependenciesbuildscript { // ... repositories ... dependencies { classpath 'com.android.tools.build:gradle:3.1.3' classpath 'com.google.gms:google-services:4.0.1' } } // ... allprojects, task clean ...

Next, add the following dependencies to the app-level `build.gradle` file (`/app/build.gradle`):

```groovy
// ... apply & android ...
dependencies {
    implementation 'com.google.firebase:firebase-core:16.0.1'
    implementation 'com.google.firebase:firebase-ml-vision:16.0.0'
    implementation 'com.google.firebase:firebase-ml-vision-image-label-model:15.0.0'
    // ... other dependencies ...
}
```

Then add the following to the bottom of the same file (right after the `dependencies` block) and press Sync now in the bar that appears in the IDE:

```groovy
apply plugin: 'com.google.gms.google-services'
```

Open up the `strings.xml` file (`res/values/strings.xml`) and add the following string resources:

```xml
<string name="action_process">Process</string>
<string name="storage_access_required">Storage access is required to enable selection of images</string>
<string name="ok">OK</string>
<string name="select_image">Select an image for processing</string>
```

In `menu_main.xml`, add the following menu item:

```xml
<item
    android:id="@+id/action_process"
    android:title="@string/action_process"
    app:showAsAction="ifRoom" />
```

The app will allow the user to select an image on their phone and process it with the ML Kit library. To load images, it needs permission to read the phone's storage. Add the following permission to your `AndroidManifest.xml` file:

```xml
<uses-permission android:name="android.permission.READ_EXTERNAL_STORAGE" />
```

Also, add the following to the manifest file, inside the `application` element:

```xml
<meta-data
    android:name="com.google.firebase.ml.vision.DEPENDENCIES"
    android:value="label" />
```

The above code is optional, but adding it to your manifest file is recommended if your app will use any of the on-device APIs. With this configuration, the app will automatically download the ML model(s) specified by `android:value` after the app is installed. To use more than one model, list them separated by commas, for example `android:value="ocr,face,barcode,label"`.

Replace the content of `content_main.xml` with the following:

```xml
<?xml version="1.0" encoding="utf-8"?>
<LinearLayout xmlns:android="http://schemas.android.com/apk/res/android"
    xmlns:app="http://schemas.android.com/apk/res-auto"
    xmlns:tools="http://schemas.android.com/tools"
    android:layout_width="match_parent"
    android:layout_height="match_parent"
    android:orientation="vertical"
    app:layout_behavior="@string/appbar_scrolling_view_behavior"
    tools:context=".MainActivity"
    tools:showIn="@layout/activity_main">

    <ImageView
        android:id="@+id/imageView"
        android:layout_width="match_parent"
        android:layout_height="0dp"
        android:layout_weight="1" />

    <TextView
        android:id="@+id/textView"
        android:layout_width="match_parent"
        android:layout_height="0dp"
        android:layout_margin="8dp"
        android:layout_weight="1" />

</LinearLayout>
```

In `activity_main.xml`, give the `android.support.design.widget.CoordinatorLayout` element an `id` by adding `android:id="@+id/main_layout"` to it.

Then change the icon of the `FloatingActionButton` from `ic_dialog_email` to `ic_menu_gallery`:

```xml
app:srcCompat="@android:drawable/ic_menu_gallery"
```

Add the following variables to the `MainActivity` class:

```java
public class MainActivity extends AppCompatActivity {

    private static final int SELECT_PHOTO_REQUEST_CODE = 100;
    private static final int ASK_PERMISSION_REQUEST_CODE = 101;
    private static final String TAG = MainActivity.class.getName();

    private TextView mTextView;
    private ImageView mImageView;
    private View mLayout;
    private FirebaseVisionLabelDetector mDetector;

    // ... methods ...
}
```

If you haven't done so, I recommend enabling Auto Import in Android Studio, which automatically adds unambiguous imports to the class as you write code that uses them. You can also refer to this file for a full list of the imports used in `MainActivity`.

Modify `onCreate()` as follows:

```java
@Override
protected void onCreate(Bundle savedInstanceState) {
    super.onCreate(savedInstanceState);
    setContentView(R.layout.activity_main);
    Toolbar toolbar = (Toolbar) findViewById(R.id.toolbar);
    setSupportActionBar(toolbar);

    mTextView = findViewById(R.id.textView);
    mImageView = findViewById(R.id.imageView);
    mLayout = findViewById(R.id.main_layout);

    FloatingActionButton fab = findViewById(R.id.fab);
    fab.setOnClickListener(new View.OnClickListener() {
        @Override
        public void onClick(View view) {
            checkPermissions();
        }
    });
}
```

Here, we get references to the views in our layout and set a click listener on the `FloatingActionButton`. The user will use this button to select an image from their phone. The image will then be loaded into the `ImageView` that we added to `content_main.xml`.

Add the following two methods to the class:

```java
public class MainActivity extends AppCompatActivity {

    // ... variables and methods ...

    private void checkPermissions() {
        if (ContextCompat.checkSelfPermission(MainActivity.this,
                Manifest.permission.READ_EXTERNAL_STORAGE) != PackageManager.PERMISSION_GRANTED) {
            // Permission not granted
            if (ActivityCompat.shouldShowRequestPermissionRationale(MainActivity.this,
                    Manifest.permission.READ_EXTERNAL_STORAGE)) {
                // Show an explanation to the user of why permission is needed
                Snackbar.make(mLayout, R.string.storage_access_required, Snackbar.LENGTH_INDEFINITE)
                        .setAction(R.string.ok, new View.OnClickListener() {
                            @Override
                            public void onClick(View view) {
                                // Request the permission
                                ActivityCompat.requestPermissions(MainActivity.this,
                                        new String[]{Manifest.permission.READ_EXTERNAL_STORAGE},
                                        ASK_PERMISSION_REQUEST_CODE);
                            }
                        }).show();
            } else {
                // No explanation needed; request the permission
                ActivityCompat.requestPermissions(MainActivity.this,
                        new String[]{Manifest.permission.READ_EXTERNAL_STORAGE},
                        ASK_PERMISSION_REQUEST_CODE);
            }
        } else {
            // Permission has already been granted
            openGallery();
        }
    }

    private void openGallery() {
        Intent photoPickerIntent = new Intent(Intent.ACTION_PICK);
        photoPickerIntent.setType("image/*");
        startActivityForResult(photoPickerIntent, SELECT_PHOTO_REQUEST_CODE);
    }
}
```

In `checkPermissions()`, we first check whether the app already has permission to read external storage. If it does, we call `openGallery()`. If it doesn't, we call `shouldShowRequestPermissionRationale()`, which returns `true` if the user has previously denied the request, and `false` if this is the first request or if the user previously denied it and chose the Don't ask again option. When it returns `true`, we show a Snackbar explaining why the permission is needed before requesting it again.

If the user had denied the permission and chosen the Don't ask again option, the Android system respects their request and doesn't show them the permission dialog again for that specific permission. In that case, `shouldShowRequestPermissionRationale()` returns `false`, so our code calls `requestPermissions()` directly, and the system immediately calls `onRequestPermissionsResult()` with `PERMISSION_DENIED` without showing a dialog.

Add the following methods to `MainActivity`:

```java
public class MainActivity extends AppCompatActivity {

    // ... variables and methods ...

    @Override
    protected void onActivityResult(int requestCode, int resultCode, Intent data) {
        super.onActivityResult(requestCode, resultCode, data);
        if (requestCode == SELECT_PHOTO_REQUEST_CODE && resultCode == RESULT_OK
                && data != null && data.getData() != null) {
            Uri uri = data.getData();
            try {
                Bitmap bitmap = MediaStore.Images.Media.getBitmap(getContentResolver(), uri);
                mImageView.setImageBitmap(bitmap);
                mTextView.setText("");
            } catch (IOException e) {
                e.printStackTrace();
            }
        }
    }

    @Override
    public void onRequestPermissionsResult(int requestCode, @NonNull String[] permissions,
                                           @NonNull int[] grantResults) {
        super.onRequestPermissionsResult(requestCode, permissions, grantResults);
        switch (requestCode) {
            case ASK_PERMISSION_REQUEST_CODE: {
                // If the permission request is cancelled, the grantResults array will be empty.
                if (grantResults.length > 0 && grantResults[0] == PackageManager.PERMISSION_GRANTED) {
                    // Permission was granted
                    openGallery();
                } else {
                    // Permission denied. Handle appropriately, e.g. you can disable the
                    // functionality that depends on the permission.
                }
                return;
            }
        }
    }
}
```
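The branching in `checkPermissions()` and `onRequestPermissionsResult()` can be summarized as two pure functions. This makes the flow easy to reason about and to unit-test on the JVM. The `NextStep` enum and the `StoragePermissionFlow` class are hypothetical. The two constants mirror the values of Android's `PackageManager.PERMISSION_GRANTED` (0) and `PackageManager.PERMISSION_DENIED` (-1), so the sketch runs without the Android SDK.

```java
/** Hypothetical: what the activity should do when the user taps the FloatingActionButton. */
enum NextStep { OPEN_GALLERY, SHOW_RATIONALE_THEN_REQUEST, REQUEST_PERMISSION }

/** Hypothetical, Android-free summary of the permission logic in MainActivity. */
final class StoragePermissionFlow {

    // Same values as PackageManager.PERMISSION_GRANTED and PackageManager.PERMISSION_DENIED.
    static final int PERMISSION_GRANTED = 0;
    static final int PERMISSION_DENIED = -1;

    private StoragePermissionFlow() {}

    /** Mirrors checkPermissions(): if granted, open the gallery; otherwise explain first only when advised. */
    static NextStep onFabClicked(boolean alreadyGranted, boolean shouldShowRationale) {
        if (alreadyGranted) {
            return NextStep.OPEN_GALLERY;
        }
        return shouldShowRationale ? NextStep.SHOW_RATIONALE_THEN_REQUEST : NextStep.REQUEST_PERMISSION;
    }

    /** Mirrors onRequestPermissionsResult(): an empty array means the request was cancelled. */
    static boolean wasGranted(int[] grantResults) {
        return grantResults.length > 0 && grantResults[0] == PERMISSION_GRANTED;
    }
}
```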

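In `openGallery()`, we call `startActivityForResult()` to let the user pick an image. Once an image has been selected, `onActivityResult()` is called. There, we load the selected image into the `ImageView` and clear any previous results from the `TextView`. In `onRequestPermissionsResult()`, if the request code is `ASK_PERMISSION_REQUEST_CODE` and the permission was granted, we call `openGallery()`.

After the user selects an image, we want them to be able to process it with ML Kit. In `menu_main.xml`, we added a Process menu item. Add the following `else if` branch to the `if` statement in `onOptionsItemSelected()` in `MainActivity` so that tapping the item calls `processImage()`:

```java
if (id == R.id.action_settings) {
    return true;
} else if (id == R.id.action_process) {
    processImage();
    return true;
}
```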

Next, add the following to the class.

```java
public class MainActivity extends AppCompatActivity {

    // ... variables and methods ...

    private void processImage() {
        if (mImageView.getDrawable() == null) {
            // ImageView has no image
            Snackbar.make(mLayout, R.string.select_image, Snackbar.LENGTH_SHORT).show();
        } else {
            // ImageView contains an image
            Bitmap bitmap = ((BitmapDrawable) mImageView.getDrawable()).getBitmap();
            FirebaseVisionImage image = FirebaseVisionImage.fromBitmap(bitmap);
            mDetector = FirebaseVision.getInstance().getVisionLabelDetector();
            mDetector.detectInImage(image)
                    .addOnSuccessListener(
                            new OnSuccessListener<List<FirebaseVisionLabel>>() {
                                @Override
                                public void onSuccess(List<FirebaseVisionLabel> labels) {
                                    // Task completed successfully
                                    StringBuilder sb = new StringBuilder();
                                    for (FirebaseVisionLabel label : labels) {
                                        String text = label.getLabel();
                                        String entityId = label.getEntityId();
                                        float confidence = label.getConfidence();
                                        sb.append("Label: " + text + "; Confidence: " + confidence
                                                + "; Entity ID: " + entityId + "\n");
                                    }
                                    mTextView.setText(sb);
                                }
                            })
                    .addOnFailureListener(
                            new OnFailureListener() {
                                @Override
                                public void onFailure(@NonNull Exception e) {
                                    // Task failed with an exception
                                    Log.e(TAG, "Image labelling failed " + e);
                                }
                            });
        }
    }
}
```

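When `processImage()` is called, it first checks whether the `ImageView` contains an image and, if it doesn't, prompts the user to select one. Before we can label the image, we first create a `FirebaseVisionImage` object from it. A `FirebaseVisionImage` can be created from a `Bitmap`, a `media.Image` or a `ByteBuffer`; here we use the `Bitmap` displayed in the `ImageView`. We then instantiate the `FirebaseVisionLabelDetector`, which finds labels in an image and returns them as `FirebaseVisionLabel` objects. Finally, we pass the image to the detector's `detectInImage()` method.

If the labeling succeeds, a list of `FirebaseVisionLabel` objects is passed to the success listener. Each `FirebaseVisionLabel` provides the label's text, the model's confidence score and an entity ID, which we append to the text shown in the `TextView`. If the labeling fails, the failure listener logs the exception.

When you are done with the detector, or when it is clear that it will not be in use, you should close it to release the resources it is using. In our app, we can do this in `onPause()`:

```java
public class MainActivity extends AppCompatActivity {

    // ... variables and methods ...

    @Override
    protected void onPause() {
        super.onPause();
        if (mDetector != null) {
            try {
                mDetector.close();
            } catch (IOException e) {
                Log.e(TAG, "Exception thrown while trying to close Image Labeling Detector: " + e);
            }
            // Drop the reference so a closed detector is not closed again on the next pause;
            // processImage() creates a new detector when needed.
            mDetector = null;
        }
    }
}
```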

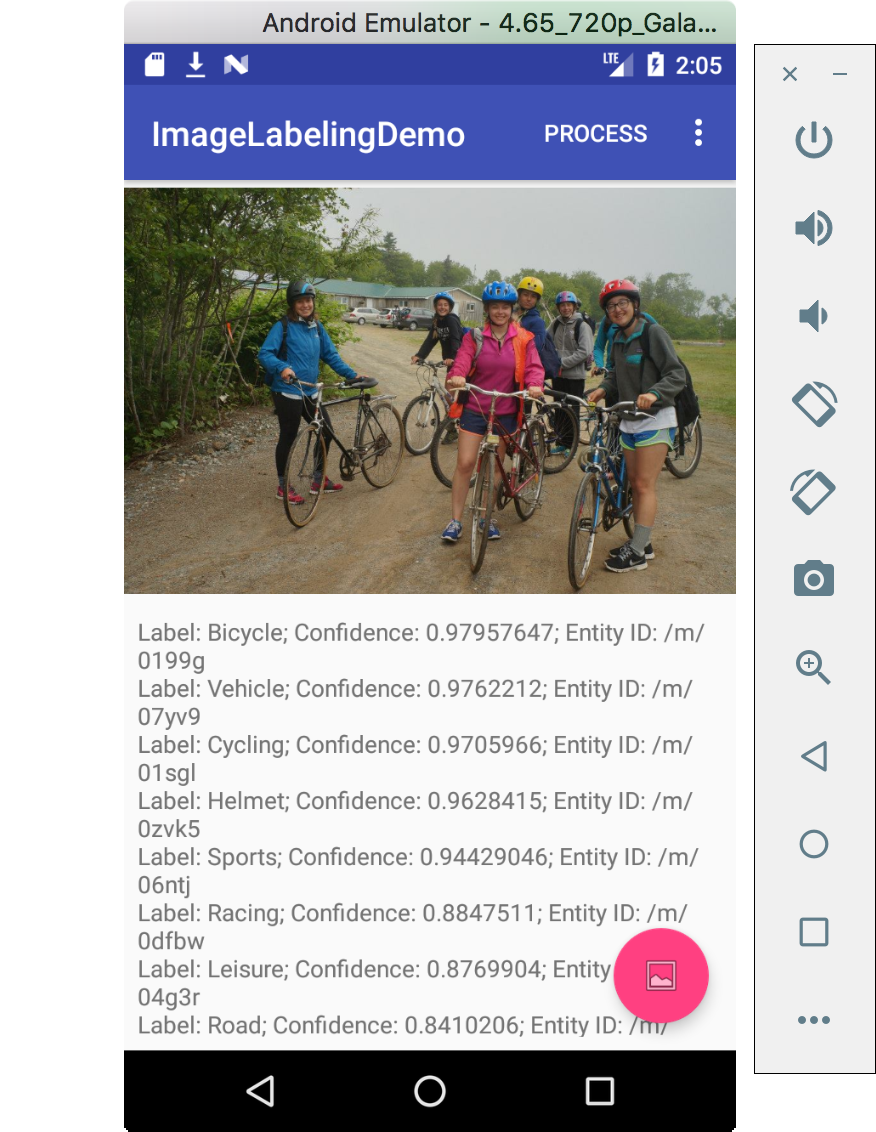

With that, you can now run the application.

After selecting an image and tapping the Process button in the app bar, you will see the detected labels and their corresponding confidence scores. If you are running the app on the emulator, refer to this guide to add an image to the emulator's gallery.

By default, the on-device image labeler returns a maximum of 10 labels (it may return fewer). If you want to change this behavior, you can set a confidence threshold on your detector to filter out low-confidence labels. This is done with a `FirebaseVisionLabelDetectorOptions` object. The following example sets the threshold to `0.8`:

```java
FirebaseVisionLabelDetectorOptions options =
        new FirebaseVisionLabelDetectorOptions.Builder()
                .setConfidenceThreshold(0.8f)
                .build();
```

The `FirebaseVisionLabelDetectorOptions` object is then passed to `getVisionLabelDetector()` when creating the detector:

```java
FirebaseVisionLabelDetector detector = FirebaseVision.getInstance()
        .getVisionLabelDetector(options);
```
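The confidence threshold is applied by the detector itself. If you also want to cap how many labels you display and show the most confident ones first, you could post-process the results in plain Java. In the sketch below, the `DetectedLabel` class is a hypothetical stand-in for `FirebaseVisionLabel`; in the app, its values would come from `getLabel()` and `getConfidence()`. `LabelFilter.topLabels()` is a helper of our own, not an ML Kit method.

```java
import java.util.Comparator;
import java.util.List;
import java.util.stream.Collectors;

/** Hypothetical stand-in for the text and confidence of a FirebaseVisionLabel. */
final class DetectedLabel {
    final String text;
    final float confidence;

    DetectedLabel(String text, float confidence) {
        this.text = text;
        this.confidence = confidence;
    }
}

/** Hypothetical post-processing helper; not part of ML Kit. */
final class LabelFilter {

    private LabelFilter() {}

    /** Keeps labels at or above minConfidence, most confident first, at most maxCount of them. */
    static List<DetectedLabel> topLabels(List<DetectedLabel> labels, float minConfidence, int maxCount) {
        return labels.stream()
                .filter(label -> label.confidence >= minConfidence)
                .sorted(Comparator.comparingDouble((DetectedLabel label) -> label.confidence).reversed())
                .limit(maxCount)
                .collect(Collectors.toList());
    }
}
```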

“I just built an @Android app that recognizes objects on images! #MachineLearning”


Aside: Securing Android Apps with Auth0

Securing applications with Auth0 is very easy and brings a lot of great features to the table. With Auth0, we only have to write a few lines of code to get a solid identity management solution, single sign-on, support for social identity providers (like Facebook, GitHub, Twitter, etc.), and support for enterprise identity providers (Active Directory, LDAP, SAML, custom, etc.).

In the following sections, we are going to learn how to use Auth0 to secure Android apps. As we will see, the process is simple and fast.

Dependencies

To secure Android apps with Auth0, we just need to import the Auth0.Android library. This library is a toolkit that lets us communicate with many of the basic Auth0 API functions in a neat way.

To import this library, we have to include the following dependency in our `build.gradle` file:

```groovy
dependencies {
    compile 'com.auth0.android:auth0:1.12.0'
}
```

After that, we need to open our app's `AndroidManifest.xml` file and add the following permission:

```xml
<uses-permission android:name="android.permission.INTERNET" />
```

Create an Auth0 Application

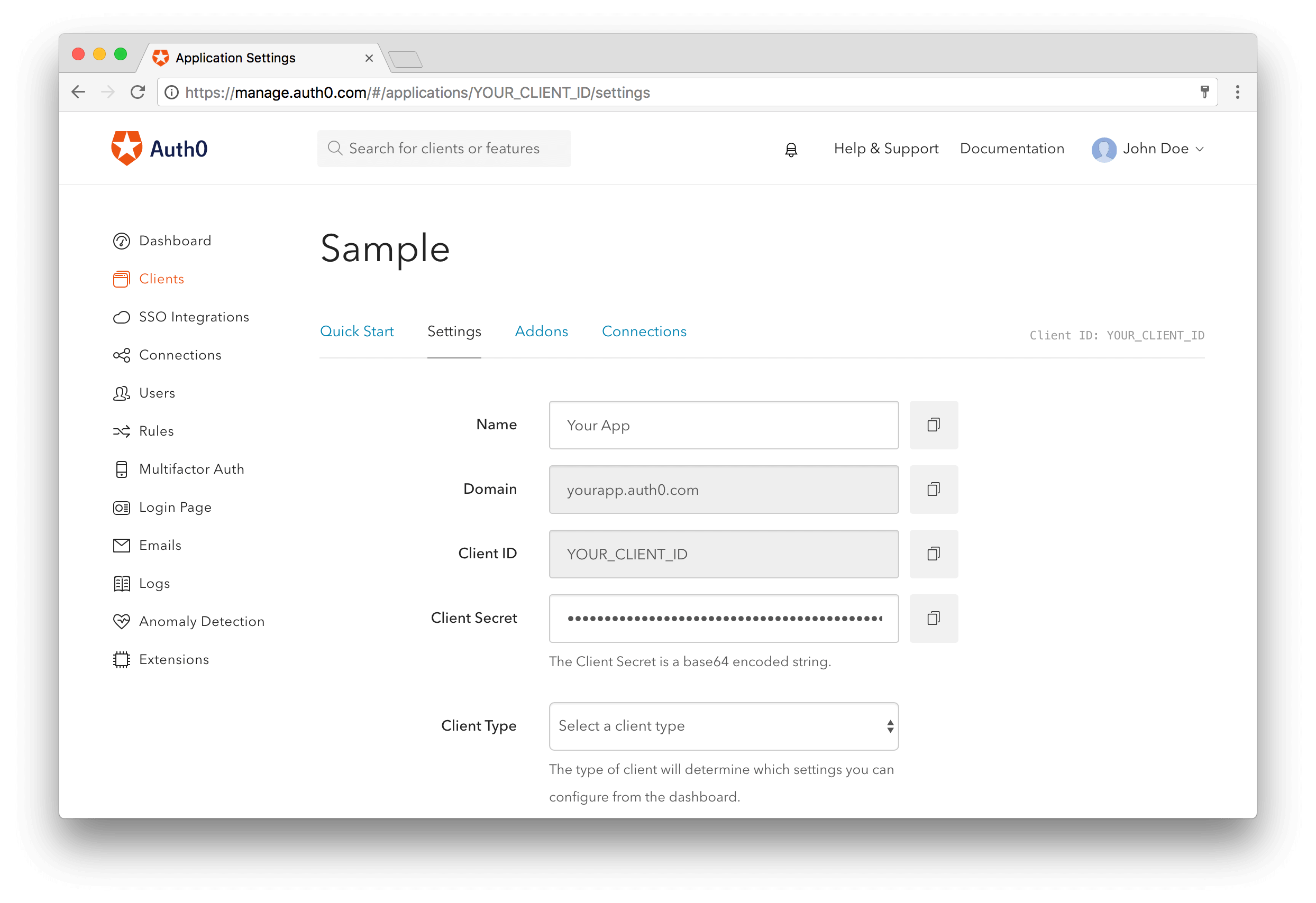

After importing the library and adding the permission, we need to register the application in our Auth0 dashboard. By the way, if we don't have an Auth0 account, this is a great time to create a free one .

In the Auth0 dashboard, we have to go to Applications and then click on the Create Application button. In the form that is shown, we have to define a name for the application and select the Native type for it. After that, we can hit the Create button. This will lead us to a screen similar to the following one:

On this screen, we have to configure a callback URL. This is a URL in our Android app where Auth0 redirects the user after they have authenticated.

We need to whitelist the callback URL for our Android app in the Allowed Callback URLs field in the Settings page of our Auth0 application. If we do not set any callback URL, our users will see a mismatch error when they log in.

```
demo://bkrebs.auth0.com/android/OUR_APP_PACKAGE_NAME/callback
```
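The callback URL follows the pattern `{scheme}://{domain}/android/{packageName}/callback`, as in the example above. To make the pieces explicit, here is a tiny hypothetical helper (not part of Auth0.Android) that assembles the URL from the scheme, the Auth0 domain and the package name.

```java
/** Hypothetical helper that assembles the callback URL to whitelist in the Auth0 dashboard. */
final class Auth0CallbackUrl {

    private Auth0CallbackUrl() {}

    /** Builds "{scheme}://{domain}/android/{packageName}/callback". */
    static String build(String scheme, String domain, String packageName) {
        return scheme + "://" + domain + "/android/" + packageName + "/callback";
    }
}
```

With this article's sample values, `Auth0CallbackUrl.build("demo", "bkrebs.auth0.com", "com.auth0.samples")` returns `demo://bkrebs.auth0.com/android/com.auth0.samples/callback`.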

Let's not forget to replace `OUR_APP_PACKAGE_NAME` with our Android application's package name. We can find this name in the `applicationId` attribute of the `app/build.gradle` file.

Set Credentials

Our Android application needs some details from Auth0 to communicate with it. We can get these details from the Settings section for our Auth0 application in the Auth0 dashboard.

We need the following information:

- Client ID

- Domain

It's best not to hardcode these values, as we may need to change them in the future. Instead, let's use String Resources, such as `@string/com_auth0_domain`, to define them. Let's edit our `res/values/strings.xml` file as follows:

```xml
<resources>
    <string name="com_auth0_client_id">2qu4Cxt4h2x9In7Cj0s7Zg5FxhKpjooK</string>
    <string name="com_auth0_domain">bkrebs.auth0.com</string>
</resources>
```

These values have to be replaced by those found in the Settings section of our Auth0 application.

Android Login

To implement the login functionality in our Android app, we need to add manifest placeholders required by the SDK. These placeholders are used internally to define an `intent-filter`, which captures the authentication callback URL. To add the manifest placeholders, let's add the next line to the app-level `build.gradle` file:

```groovy
apply plugin: 'com.android.application'

android {
    compileSdkVersion 25
    buildToolsVersion "25.0.3"
    defaultConfig {
        applicationId "com.auth0.samples"
        minSdkVersion 15
        targetSdkVersion 25
        //...

        //---> Add the next line
        manifestPlaceholders = [auth0Domain: "@string/com_auth0_domain", auth0Scheme: "demo"]
        //<---
    }
}
```

After that, we have to run Sync Project with Gradle Files inside Android Studio or execute `./gradlew clean assembleDebug` from the command line.

Start the Authentication Process

The Auth0 login page is the easiest way to set up authentication in our application. Using it is recommended for the best experience, the best security and the fullest array of features.

Now we have to implement a method to start the authentication process. Let's call this method `login` and add it to our `MainActivity` class:

```java
private void login() {
    Auth0 auth0 = new Auth0(this);
    auth0.setOIDCConformant(true);
    WebAuthProvider.init(auth0)
            .withScheme("demo")
            .withAudience(String.format("https://%s/userinfo", getString(R.string.com_auth0_domain)))
            .start(MainActivity.this, new AuthCallback() {
                @Override
                public void onFailure(@NonNull Dialog dialog) {
                    // Show error Dialog to user
                }

                @Override
                public void onFailure(AuthenticationException exception) {
                    // Show error to user
                }

                @Override
                public void onSuccess(@NonNull Credentials credentials) {
                    // Store credentials
                    // Navigate to your main activity
                }
            });
}
```

As we can see, we had to create a new instance of the `Auth0` class, which holds our Auth0 application's details (client ID and domain). We can use a constructor that receives an Android `Context` if we have added the following String resources:

- `R.string.com_auth0_client_id`

- `R.string.com_auth0_domain`

If we prefer to hardcode the resources, we can use the constructor that receives both strings. Then, we can use the `WebAuthProvider` class to start the authentication process. After we call `WebAuthProvider#start`, the browser opens and shows the Auth0 login page.

Capture the Result

After authentication, the browser redirects the user to our application with the authentication result. The SDK captures the result and parses it.

We do not need to declare a specific `intent-filter` for our activity because we have defined the manifest placeholders with our Auth0 Domain and Scheme values.

Our `AndroidManifest.xml` file should look like this:

```xml
<manifest xmlns:android="http://schemas.android.com/apk/res/android"
    package="com.auth0.samples">

    <uses-permission android:name="android.permission.INTERNET" />

    <application
        android:allowBackup="true"
        android:icon="@mipmap/ic_launcher"
        android:label="@string/app_name"
        android:theme="@style/AppTheme">
        <activity android:name="com.auth0.samples.MainActivity">
            <intent-filter>
                <action android:name="android.intent.action.MAIN" />
                <category android:name="android.intent.category.LAUNCHER" />
            </intent-filter>
        </activity>
    </application>

</manifest>
```

That's it, we now have an Android application secured with Auth0. To learn more about this, we can check the official documentation. There, we will find more topics like Session Handling and Fetching User Profile.

Conclusion

In this article, we have looked at Google's ML Kit, a machine learning SDK for mobile, and all the features it offers. We've also looked at how to use one of its APIs in an Android application. To find out more about this SDK, you can watch this video from Google I/O, and you should also take a look at the documentation, which covers all the APIs and shows their use on both Android and iOS. You can also take a look at the code in these apps to see how the various APIs are implemented on Android and on iOS.