"It is possible to build a cabin with no foundations but not a lasting building"

— Eng. Isidor Goldreich (1906–1995)

We use cryptography daily without realizing it to protect our digital life. When unlocking our smartphones, making purchases online, or simply surfing the web, cryptography is the underlying technology protecting our private data, our identities, and our digital footprint. Cryptography is an integral part of the security process used to protect information in computing systems.

The security principles cryptosystems must ensure are data confidentiality, data authenticity, data integrity, and user authentication. Cryptography is often characterized as the strongest part of the security process. In theory, cryptography indeed provides strong security guarantees based on mathematical security models and proofs. Cryptographic implementations in practice are, however, brittle and, as such, are particularly prone to introducing vulnerabilities when they are performed by non-experts. Knowledge of the basic building blocks and principles, as well as their secure implementation as specified in standards and specifications, is therefore essential. In this post, we give a bird's-eye view of modern cryptography, its building blocks, and its principles in order to paint a picture of the areas in which developers can build a breadth of knowledge in order to implement secure cryptographic protocols.

Modern Cryptography

Encryption is the main application of cryptography. It consists of producing an unintelligible blob of data from actual data in order to ensure its confidentiality. As a process, it can be described as a set of encryption/decryption algorithms, with at least two parties who are trying to exchange some information over an insecure network. The encryption algorithm is referred to as the cipher, the unencrypted message is referred to as the plaintext, and the encrypted blob resulting from applying the cipher to the plaintext is the ciphertext. The encryption process uses the cipher along with a secret key to derive the ciphertext. Without knowing the key, no one, and certainly no attacker, should be able to decrypt the ciphertext to recover the initial plaintext. Cryptographic secrecy goes even further, requiring that not one bit of information about the plaintext can be learned from the ciphertext without knowing the key. A related design rule is Kerckhoffs's principle, which states that the security of an encryption algorithm should reside solely in the secrecy of the encryption key. The entire encryption algorithm can therefore be public, and the security guarantees still hold as long as the secrecy of the encryption key is maintained.

In that context, two paradigms are used in cryptography to handle the secure deployment, use, and protection of cryptographic keys, namely secret key cryptography and public key cryptography.

Secret Key Cryptography

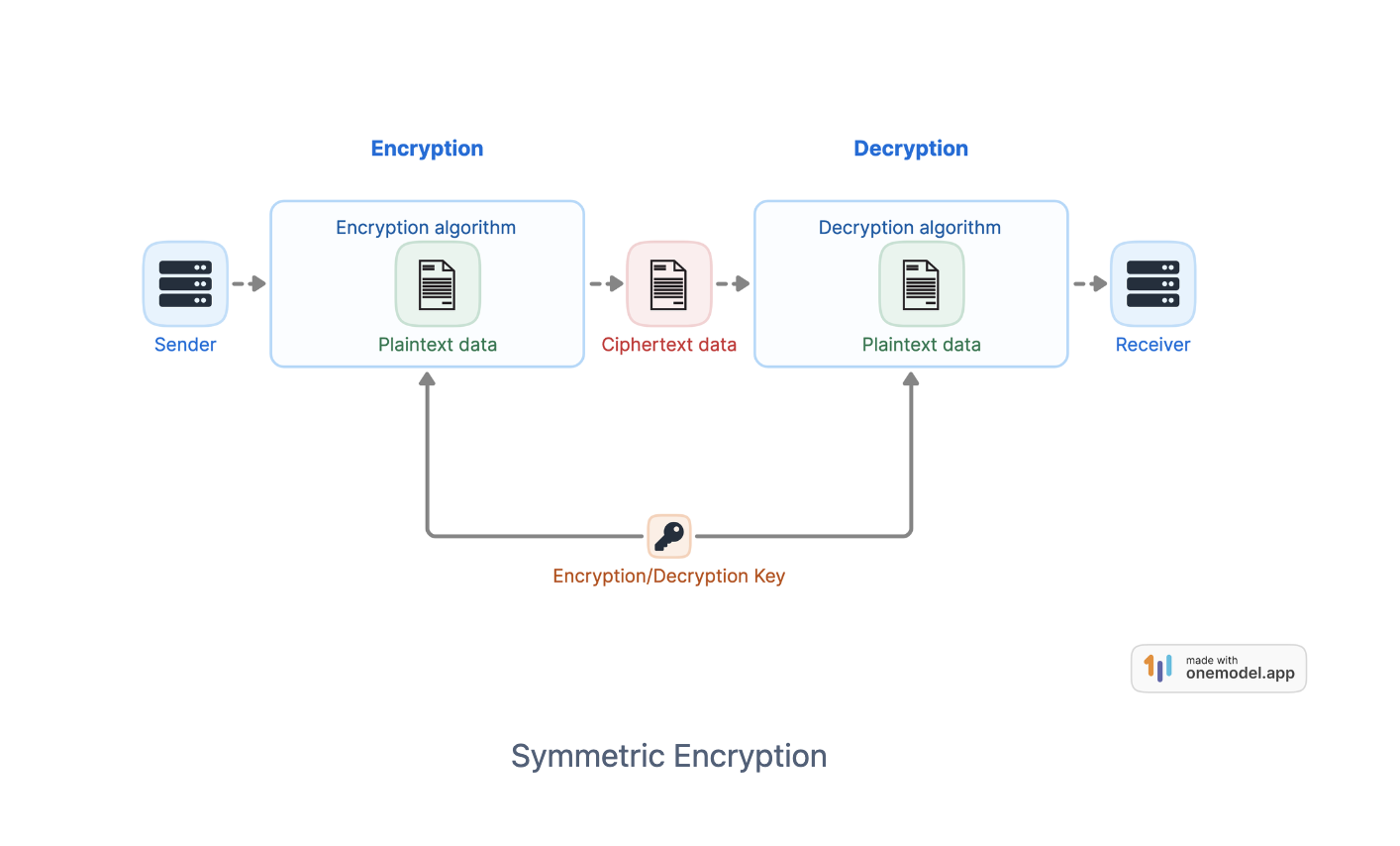

Symmetric encryption

Symmetric encryption, also known as secret key encryption, consists of using the same cryptographic key for both data encryption and decryption. In the figure below, we depict how plaintext can be turned into ciphertext using the cipher and the secret key. The same key is used when the cipher is used in decryption mode to retrieve the plaintext from the ciphertext. A cipher is a set of two algorithms, the encryption algorithm E(K,m) -> c that takes the encryption key K and the message m to be encrypted as parameters and returns the ciphertext c, and the decryption algorithm D(K,c) -> m that is defined as the inverse operation of encryption and decrypts a message back to the original plaintext.

Generating symmetric keys

Symmetric encryption keys are used by two parties to encrypt and decrypt data securely. The keys must be generated securely and then shared in a secure manner, even though the channel between the parties is typically insecure. A symmetric key provides a security level equal to its number of bits, i.e., a 128-bit key provides 128-bit security (relative to a brute-force attack), provided it is generated using a cryptographically secure pseudorandom number generator. A library like OpenSSL provides a toolkit to generate a random symmetric key.
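As a minimal sketch of key generation, the snippet below draws a key from the operating system's cryptographically secure generator using Python's standard `secrets` module. The function name `generate_symmetric_key` is a hypothetical helper introduced for illustration; it is not part of OpenSSL or of any library mentioned in this article.

```python
import secrets


def generate_symmetric_key(bits: int = 128) -> bytes:
    """Return a fresh random key of `bits` bits from the OS CSPRNG.

    Hypothetical helper for illustration only.
    """
    if bits % 8 != 0:
        raise ValueError("key size must be a multiple of 8 bits")
    return secrets.token_bytes(bits // 8)


key = generate_symmetric_key(128)
print(f"{len(key) * 8}-bit key: {key.hex()}")
```

Each call returns a different key, so the printed value changes from run to run.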

The Advanced Encryption Standard (AES)

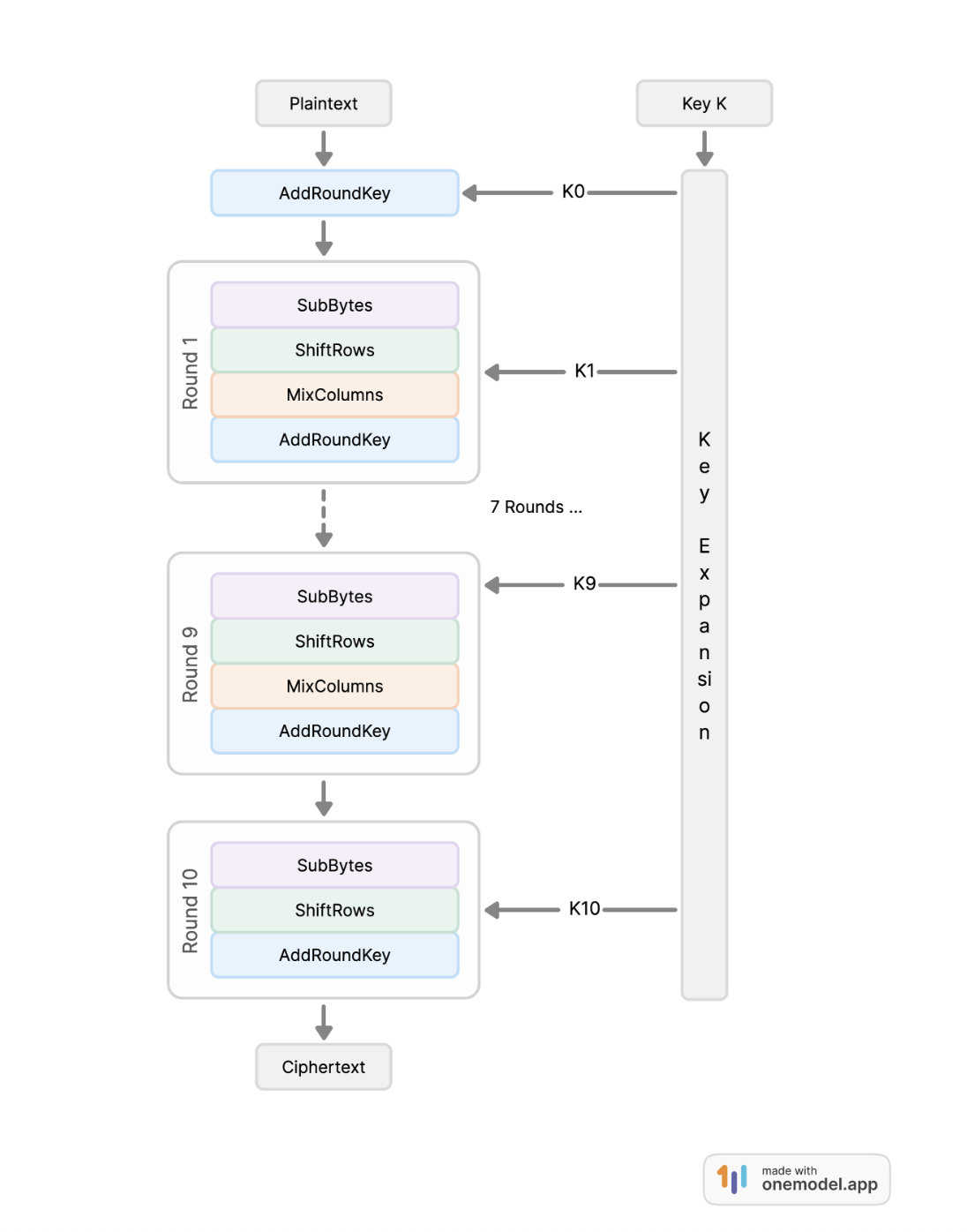

A block cipher is an encryption cipher characterized by two elements, namely its block size and its key size. A plaintext message is divided into blocks of b bits where b is the block size. Said blocks are then processed by the encryption function all at once, adding optional padding at the end when the plaintext size is not an integer multiple of the block size. Most ciphers used for data encryption today are block ciphers due to both their practicality and their efficiency.
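The block-splitting and padding step can be sketched as follows. The article does not name a padding scheme; PKCS#7 is assumed here because it is a common choice. Note that PKCS#7 always appends padding, adding a full extra block when the plaintext is already aligned, so that the padding can be removed unambiguously. The function names are hypothetical.

```python
BLOCK_SIZE = 16  # AES block size in bytes (128 bits)


def pad(data: bytes, block_size: int = BLOCK_SIZE) -> bytes:
    """Append n bytes, each of value n, to reach a multiple of block_size."""
    n = block_size - (len(data) % block_size)
    return data + bytes([n]) * n


def unpad(padded: bytes, block_size: int = BLOCK_SIZE) -> bytes:
    """Validate and strip PKCS#7 padding."""
    if not padded or len(padded) % block_size != 0:
        raise ValueError("invalid padded length")
    n = padded[-1]
    if n < 1 or n > block_size or padded[-n:] != bytes([n]) * n:
        raise ValueError("invalid padding")
    return padded[:-n]


def split_blocks(padded: bytes, block_size: int = BLOCK_SIZE):
    """Cut a padded message into the fixed-size blocks a cipher would process."""
    return [padded[i:i + block_size] for i in range(0, len(padded), block_size)]


message = b"a message longer than one block"
blocks = split_blocks(pad(message))
print(len(blocks), "blocks of", BLOCK_SIZE, "bytes")
```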

The Advanced Encryption Standard (AES) is the most widely used cipher in the world. It was adopted as an encryption standard by the U.S. National Institute of Standards and Technology (NIST) in 2001 for the encryption of electronic data. It replaced the deprecated Data Encryption Standard (DES), which was introduced in 1977. AES specifies a set of symmetric-key algorithms and exists in three variants, with 128-, 192-, and 256-bit key sizes and a fixed block size of 128 bits. The AES encryption algorithm applies multiple rounds of substitutions and permutations to the initial plaintext and outputs a ciphertext with the same length as the (padded) plaintext. Each round is built from four operations, namely AddRoundKey, SubBytes, ShiftRows, and MixColumns, which mix in the round keys and provide confusion and diffusion in the ciphertext.

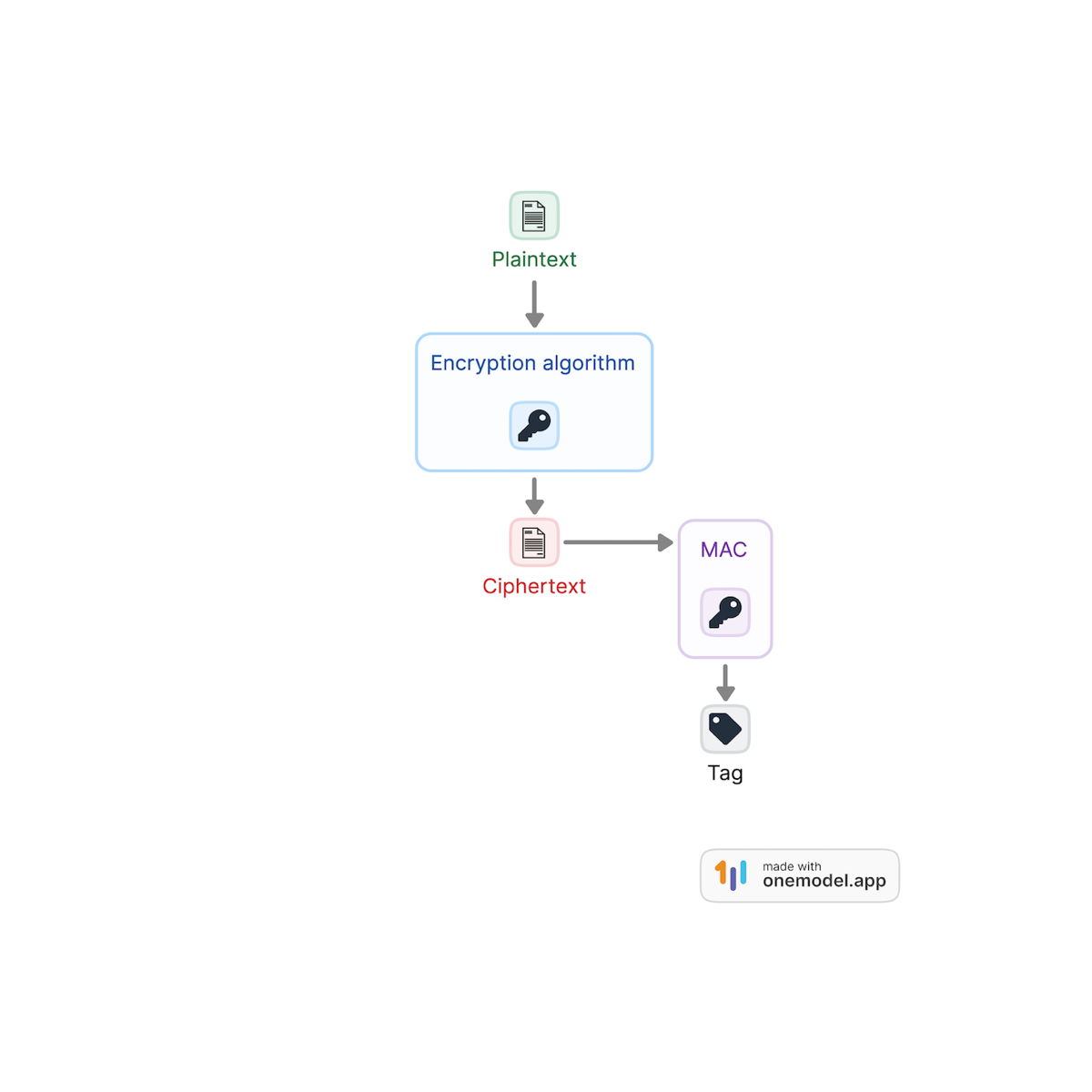

Message Authentication Code (MAC)

Encryption, whilst the main application of cryptography, does not provide every security guarantee necessary to protect user data. Indeed, user data can still be tampered with in its encrypted state, stored in a database, for example, if proper measures are not applied to check the integrity of said data. Message Authentication Codes (MACs) produce tags that are attached to a message in order to ensure its authenticity and integrity. A MAC is a keyed function T = MAC(K,m): any party that knows the MAC key can verify the integrity of the message by recomputing the tag on the message and checking that it matches the received tag. In secure communications and data storage, the practice is to combine a cipher and a MAC in order to ensure data confidentiality, data integrity, and data authenticity.
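The keyed function T = MAC(K,m) and its verification can be sketched with Python's standard `hmac` module. HMAC-SHA-256 is assumed here as the MAC construction; the article does not prescribe one. The names `compute_tag` and `verify_tag` are hypothetical.

```python
import hashlib
import hmac
import secrets


def compute_tag(key: bytes, message: bytes) -> bytes:
    """T = MAC(K, m), instantiated here with HMAC-SHA-256."""
    return hmac.new(key, message, hashlib.sha256).digest()


def verify_tag(key: bytes, message: bytes, tag: bytes) -> bool:
    """Recompute the tag and compare it in constant time."""
    return hmac.compare_digest(compute_tag(key, message), tag)


key = secrets.token_bytes(32)
tag = compute_tag(key, b"transfer 100 to Bob")
print(verify_tag(key, b"transfer 100 to Bob", tag))
print(verify_tag(key, b"transfer 900 to Bob", tag))
```

The comparison uses `hmac.compare_digest` rather than `==` so that the time taken does not reveal how many leading bytes of a forged tag were correct.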

Public Key Cryptography

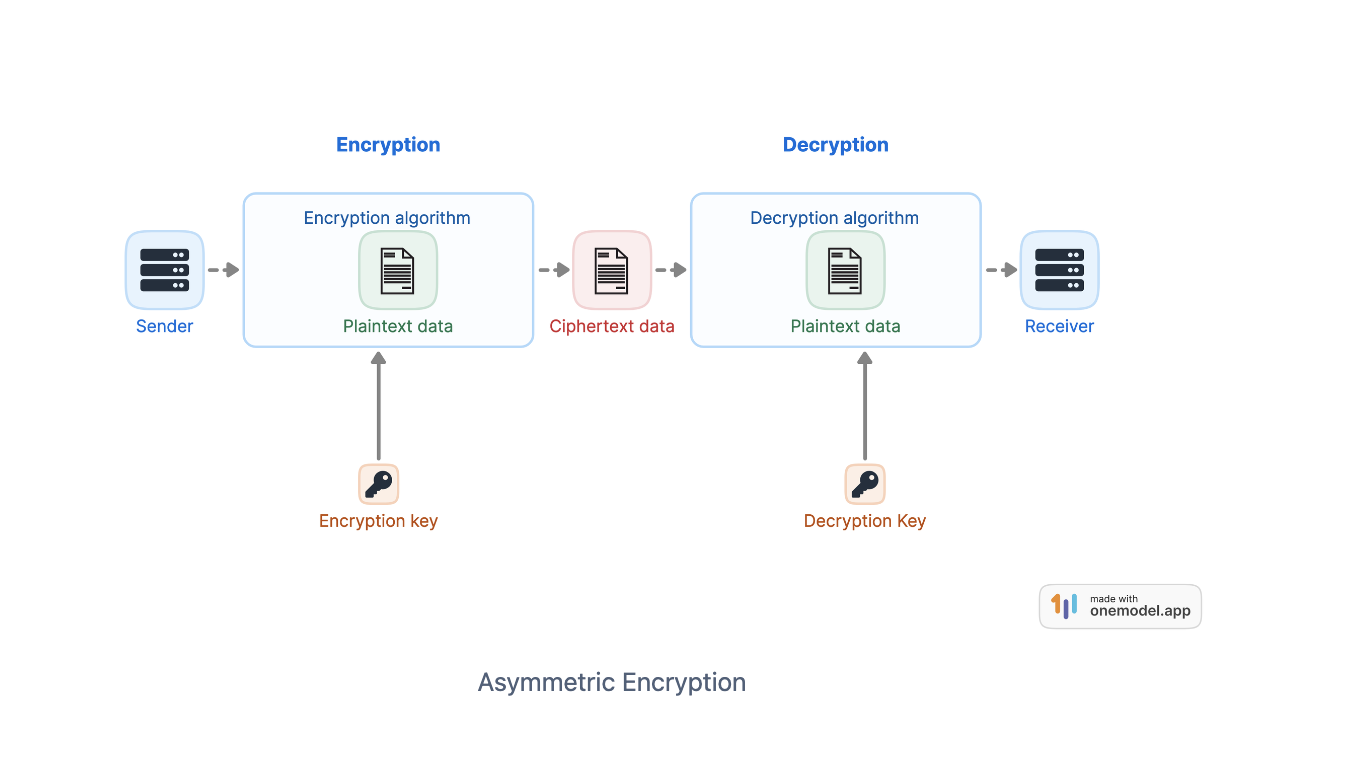

Asymmetric encryption

In asymmetric encryption, there are two keys, one for encrypting and one for decrypting. Asymmetric encryption introduced much more flexibility in secure communications as the encryption key is not kept secret. For example, if a sender, Alice, wants to send a message to Bob, Alice retrieves Bob's public encryption key, which is made available to anyone who wants to encrypt a message and send it to Bob. She then encrypts the message with Bob's key prior to sending the ciphertext to Bob. Only Bob can decrypt the ciphertext using his private decryption key, which remains secret. With symmetric encryption, we had the challenge of establishing a secure channel to share the secret encryption/decryption key prior to the encryption process. This constraint is removed in asymmetric encryption.

Digital signature

A digital signature is the public key equivalent of a MAC. It allows the holder of a private key to append a signature to a message. Just as with traditional signatures on paper, any party can then verify the authenticity of the sender by using a public verification algorithm and the signer's public key. A digital signature scheme is composed of a signing algorithm S(SK,m) -> s that produces a signature s from a private key SK on a message m, and a verification algorithm V(PK,m,s) -> {0,1} that returns 0 (false) when the verification process fails or 1 (true) when verification succeeds.

Security goals

Randomness

In cryptography, randomness is the yardstick by which the security of ciphers is measured. A cipher's output must look random to be secure; otherwise, its operations would be predictable and therefore insecure, because any attacker could emulate the encryption algorithm (again, because it is public) and predict the outcome.

A string of randomly generated bits 001101110001 is characterized by its probability distribution. A probability measures the likelihood of an event occurring. A probability is expressed as a number between 0 and 1. For example, in a fair coin toss, the probability of landing on each side of the coin is equal to 1/2. A probability distribution must include all possible outcomes. In cryptography, we are particularly interested in events with a uniform probability distribution. A uniform distribution is achieved when the probability of all possible outcomes is the same. If we go back to our example of a random string of bits 001101110001, the probability of each bit to be either 0 or 1 is equal to 1/2, and each probability is independent from the next.

The security of encryption schemes and cryptosystems, in general, is dependent on randomness. To achieve the desired security goals, they make use of Random Number Generators (RNGs) and Pseudorandom Number Generators (PRNGs). A random number generator is a source of uncertainty (or a source of entropy), usually derived from the environment using hardware (for example, electrical signals or noise), and a pseudorandom number generator is a cryptographic algorithm that takes that entropy as input to generate bit strings that are indistinguishable from random bit strings. In Unix-based systems, popular PRNGs are exposed through the /dev/random and /dev/urandom special files, which deliver random bits when read like ordinary files.
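A minimal sketch of drawing bits from the operating system's generator: Python's `os.urandom` reads from the OS source of randomness (on Unix-like systems, the same facility that backs /dev/urandom). The helper `random_bits` is hypothetical, and the frequency count is only a sanity check of uniformity, not a test of cryptographic quality.

```python
import os


def random_bits(n_bytes: int) -> str:
    """Return n_bytes of OS randomness rendered as a string of 0s and 1s."""
    raw = os.urandom(n_bytes)
    return "".join(f"{byte:08b}" for byte in raw)


bits = random_bits(4096)
ones = bits.count("1") / len(bits)
print(bits[:12], "...")
print(f"fraction of 1 bits: {ones:.3f}")  # expected to be close to 1/2
```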

Computational security

The security goals in cryptography are defined in terms of an attacker's capacity to break the cryptosystem. Unlike in the hardware and software world, it is possible to quantify that security using mathematical models of the attacker's capabilities and goals. Computational security quantifies a cipher's security by requiring that the cipher cannot be broken within a reasonable amount of time and with reasonable computational resources such as memory, hardware budget, etc. For example, consider our cipher E with c = E(K,m), and suppose an attacker has access to a plaintext/ciphertext pair (m,c). Let the encryption key K be a 128-bit key. Without knowing the value of K, the attacker can try the brute-force approach, which consists of trying all possible 128-bit strings to find the one that satisfies the equation c = E(K,m). Even at an assumed rate of about 5 × 10^18 keys per second, this would take roughly 2,158,000,000,000 years, which is about 160 times the age of the universe.
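The brute-force estimate can be reproduced with a few lines of arithmetic. The key-testing rate below is an assumption, chosen because it yields the figure quoted above; the age of the universe is taken as roughly 13.8 billion years. Changing the rate by a few orders of magnitude does not change the conclusion.

```python
KEY_BITS = 128
KEYS_PER_SECOND = 5e18             # assumed attacker speed (not a measured value)
SECONDS_PER_YEAR = 365 * 24 * 3600
AGE_OF_UNIVERSE_YEARS = 13.8e9     # approximate

keyspace = 2 ** KEY_BITS           # number of candidate 128-bit keys
years = keyspace / KEYS_PER_SECOND / SECONDS_PER_YEAR
ratio = years / AGE_OF_UNIVERSE_YEARS

print(f"keys to try:       {keyspace:.3e}")
print(f"years to try all:  {years:.3e}")
print(f"ages of universe:  {ratio:.0f}")
```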

Provable security

Advances in computing power, combined with intense research on cryptanalysis methods that are much more efficient than brute-force attacks, have forced cryptographers to define security in more pragmatic ways. Notably, the security of ciphers today is often based on mathematical problems that are widely believed to be hard to solve. Provable security consists in proving that breaking a cryptosystem is at least as hard as solving such a problem. The security of the cryptosystem is said to reduce to the hard problem, and this is proven using a cryptographic reduction. A widely deployed example is the RSA cryptosystem, whose security is based on the hardness of the factoring problem.

Fundamental public key constructions

The security of modern cryptosystems is based on hard computational problems. These are mathematical problems that are believed to be difficult to solve using classical computers. Hard computational problems are the cornerstone of public key cryptosystems, particularly the RSA encryption system and the Diffie-Hellman key exchange protocol.

The RSA encryption scheme

In 1977, three cryptographers, Ron Rivest, Adi Shamir, and Leonard Adleman, introduced a new eponymous public key cryptosystem that is still considered one of the most secure public key encryption schemes today. RSA uses two keys, a public key that anyone can use to encrypt a message to the receiving party, and a private key that only the receiving party knows and can use to decrypt the ciphertext. The RSA scheme revolutionized secure communication on the Internet as we know it. The security of the RSA (Rivest-Shamir-Adleman) algorithm relies on the hardness of the factoring problem: given a large number N that is the product of two large primes p and q, it is difficult to recover p and q from N alone.

The RSA scheme comprises three algorithms, namely the key generation algorithm, the encryption algorithm, and the decryption algorithm. During the RSA encryption process, a mathematical object called a trapdoor permutation is created. A trapdoor permutation is a function that, given a number x, outputs a number y in the same range (or algebraic group) as x, such that computing y from x is easy using the public key, but the reverse operation, i.e., computing x from y, is difficult without knowing the private key (also known as the trapdoor).
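The three algorithms can be sketched with textbook RSA on deliberately tiny numbers. The primes p = 61 and q = 53 and the exponent e = 17 are illustrative assumptions; real keys use primes of a thousand bits or more, and real RSA encryption always adds a padding scheme such as OAEP, which is omitted here. The modular inverse via `pow(e, -1, phi)` requires Python 3.8 or later.

```python
def generate_keys(p: int, q: int, e: int):
    """Key generation: returns (public_key, private_key)."""
    n = p * q
    phi = (p - 1) * (q - 1)
    d = pow(e, -1, phi)          # private exponent: e * d = 1 (mod phi)
    return (n, e), (n, d)


def encrypt(public_key, m: int) -> int:
    """Easy direction of the trapdoor permutation: y = x^e mod n."""
    n, e = public_key
    return pow(m, e, n)


def decrypt(private_key, c: int) -> int:
    """Inversion using the trapdoor d: x = y^d mod n."""
    n, d = private_key
    return pow(c, d, n)


public_key, private_key = generate_keys(p=61, q=53, e=17)  # toy parameters
ciphertext = encrypt(public_key, 65)
print(ciphertext, decrypt(private_key, ciphertext))
```

With numbers this small, anyone can factor n = 3233 by hand and recompute d, which is exactly the attack that large primes are meant to rule out.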

We refer to the RSA scheme as a cryptosystem because, in addition to encryption, it can also be used as a digital signature scheme. In that context, the private key is used to generate a signature on the data and is only known to the signing party, whereas the public key is used in the verification process and can be shared in a public key registry.
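A minimal sketch of RSA used for signing, in the hash-then-sign style: the message is hashed, the digest is raised to the private exponent to sign, and the verifier checks it with the public exponent. The two Mersenne primes and the exponent 65537 are assumptions chosen for readability, the key is far too small for real use, and the padding that real signature schemes (such as RSA-PSS) require is omitted.

```python
import hashlib

P = 2**61 - 1    # toy prime (assumption, far too small for real use)
Q = 2**89 - 1    # toy prime (assumption)
E = 65537        # commonly used public exponent


def generate_keys():
    n = P * Q
    phi = (P - 1) * (Q - 1)
    d = pow(E, -1, phi)                      # requires Python 3.8+
    return (n, E), (n, d)                    # (public key PK, private key SK)


def _digest_as_int(message: bytes, n: int) -> int:
    """Hash the message and reduce it into the range [0, n)."""
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % n


def sign(private_key, message: bytes) -> int:
    """S(SK, m) -> s"""
    n, d = private_key
    return pow(_digest_as_int(message, n), d, n)


def verify(public_key, message: bytes, signature: int) -> bool:
    """V(PK, m, s) -> {False, True}"""
    n, e = public_key
    return pow(signature, e, n) == _digest_as_int(message, n)


public_key, private_key = generate_keys()
signature = sign(private_key, b"release v1.2.3")
print(verify(public_key, b"release v1.2.3", signature))
print(verify(public_key, b"release v6.6.6", signature))
```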

The Diffie-Hellman key exchange

Before the introduction of the most well-known public key encryption scheme, i.e., RSA, two Stanford researchers, Whitfield Diffie and Martin Hellman, published a paper in 1976 titled "New Directions in Cryptography" that introduced the concepts of public key encryption and digital signatures. In that paper, they provided a construction for a public key distribution scheme, which is the basis of key exchange schemes as we know them today. Indeed, in order to establish a secret between two parties that want to exchange data, for example, the secret key in a symmetric encryption scheme, the parties need to agree on said secret over an insecure channel, e.g., the Internet. A key exchange protocol allows two parties to establish such a secret so that no eavesdropping party can retrieve even a single bit of it. The Diffie-Hellman (DH) key exchange protocol does so in an efficient and scalable manner. The security of the scheme is based on a computationally hard mathematical problem called the discrete logarithm problem. Similarly to the factoring problem, it yields a one-way function: modular exponentiation is easy to compute, but inverting it, i.e., recovering the private exponent from the public value, is believed to be hard.
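The exchange can be sketched in a few lines of modular arithmetic. The prime P = 2^127 - 1 and the base G = 3 are toy assumptions chosen so the example is readable; real deployments use standardized groups of 2048 bits or more, or elliptic curves, and pass the shared value through a key derivation function before using it as a key.

```python
import secrets

P = 2**127 - 1   # toy prime modulus (assumption, too small for real use)
G = 3            # toy base (assumption)


def generate_keypair():
    """Pick a private exponent and compute the public value G^private mod P."""
    private = secrets.randbelow(P - 3) + 2     # uniform in [2, P - 2]
    public = pow(G, private, P)
    return private, public


def shared_secret(own_private: int, peer_public: int) -> int:
    """Both sides arrive at G^(a*b) mod P without ever sending a or b."""
    return pow(peer_public, own_private, P)


alice_private, alice_public = generate_keypair()
bob_private, bob_public = generate_keypair()

# Only alice_public and bob_public travel over the insecure channel.
alice_view = shared_secret(alice_private, bob_public)
bob_view = shared_secret(bob_private, alice_public)
print(alice_view == bob_view)
```

Plain Diffie-Hellman as sketched here does not authenticate the parties, so it is normally combined with signatures or certificates to rule out an active attacker sitting between them.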

Session key sharing

Symmetric key sharing presents great challenges, notably when it comes to sending the key between parties trying to exchange data in a secure manner over an insecure communication channel such as the Internet. Public key cryptosystems, whilst practical because they eliminate this constraint, are less efficient than symmetric key ciphers for encrypting data. Moreover, to satisfy the most stringent security goals, public key cryptosystems need keys that are much larger than those of symmetric ciphers. The best practice in cryptography is, therefore, to use a public key scheme such as RSA or Diffie-Hellman to encrypt or establish the session key (i.e., the key used to encrypt data) and to use a symmetric cipher, such as AES, to encrypt the actual data. This way, the data encryption process is efficient and secure, and the symmetric key used to encrypt data is securely shared between all communicating parties. Nothing is exchanged on the insecure communication channel except ciphertexts and public values.

Hash Functions

Hash functions are extremely useful tools in the cryptographer's toolkit. They are used in the construction of many cryptographic protocols, including MACs, digital signatures, public key encryption, key agreement protocols, and many others. Algorithms such as MD5, SHA-1, SHA-256, SHA-3, and BLAKE2 are among the most well-known hash functions, although MD5 and SHA-1 are no longer considered collision-resistant and should not be used in new designs. Whenever you are connecting to a website via HTTPS, sending an email, accessing a file in a cloud storage system, or using SSH to connect to a remote server, a cryptographic hash function is used somewhere by those secure protocols.

A hash function takes a message of any length and outputs a short, fixed-length value (also known as a digest), usually of 256 or 512 bits. Because there are more possible messages than digests, collisions necessarily exist; the notion of security for a hash function is that finding them must be computationally infeasible. More specifically, it should be infeasible to find two different messages that produce the same digest, or to recover a message from its digest. Intensive research and standardization efforts are undertaken to maintain secure hash function standards. Hash function constructions are routinely subject to attacks, and as such, developers should keep up to date with the latest secure functions in order to avoid introducing vulnerabilities into their systems.
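The fixed-length property is easy to observe with Python's standard `hashlib` module. SHA-256 is used here as an example; the helper name `digest` is hypothetical. Inputs of very different lengths produce digests of the same size, and a one-character change in the input produces an unrelated-looking digest.

```python
import hashlib


def digest(message: bytes) -> str:
    """Return the SHA-256 digest of the message as 64 hexadecimal characters."""
    return hashlib.sha256(message).hexdigest()


for message in (b"abc", b"abd", b"a" * 1_000_000):
    label = message[:10] + (b"..." if len(message) > 10 else b"")
    print(f"{label!r:>18} ({len(message)} bytes) -> {digest(message)}")
```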

Authenticated Encryption

Authenticated Encryption (AE) combines symmetric encryption with integrity and authenticity verification by appending a tag to the ciphertext. Indeed, encryption alone does not provide those security requirements, and most secure implementations of cryptography must now use this paradigm. An AE cipher AE(K,m) -> (c,t) returns a ciphertext and a short string as the tag. The tag provides integrity and ensures that the ciphertext is identical to the one sent by a legitimate sender. It also provides the authenticity of the ciphertext when there are only two parties, as only a sender in possession of the secret key can produce that ciphertext-tag pair. The security requirement stipulates that no attacker should be able to forge a valid tag without the key. The decryption process returns the plaintext message from the ciphertext if and only if the tag t is a valid tag. The security requirement for the encryption process remains the same as for any strong cipher.

An AE scheme is constructed by combining a symmetric cipher with a MAC. The most secure approach to this combination is the Encrypt-then-MAC approach, in which the ciphertext is first computed from the plaintext, then a tag is computed on the ciphertext. In the decryption process, the receiver first recomputes the MAC on the ciphertext to verify that the tag is identical to the one received, and decrypts the ciphertext only once authenticity has been verified.
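The Encrypt-then-MAC order of operations can be sketched as follows. Python's standard library has no AES, so the cipher below is a toy stream cipher built from SHA-256 in counter mode; it is a stand-in for illustration only and an assumption of this sketch, not a recommended construction. The names `seal` and `open_sealed` are hypothetical. Production code should use a vetted AE mode such as AES-GCM or ChaCha20-Poly1305 from a maintained library.

```python
import hashlib
import hmac
import secrets


def _keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    """Toy keystream: SHA-256(key || nonce || counter). Illustration only."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + nonce + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(out[:length])


def _xor(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def seal(enc_key: bytes, mac_key: bytes, plaintext: bytes):
    """Encrypt first, then compute the tag over nonce || ciphertext."""
    nonce = secrets.token_bytes(16)
    ciphertext = _xor(plaintext, _keystream(enc_key, nonce, len(plaintext)))
    tag = hmac.new(mac_key, nonce + ciphertext, hashlib.sha256).digest()
    return nonce, ciphertext, tag


def open_sealed(enc_key: bytes, mac_key: bytes, nonce: bytes,
                ciphertext: bytes, tag: bytes) -> bytes:
    """Verify the tag first; decrypt only if it is valid."""
    expected = hmac.new(mac_key, nonce + ciphertext, hashlib.sha256).digest()
    if not hmac.compare_digest(expected, tag):
        raise ValueError("invalid tag: ciphertext rejected")
    return _xor(ciphertext, _keystream(enc_key, nonce, len(ciphertext)))


enc_key, mac_key = secrets.token_bytes(32), secrets.token_bytes(32)
nonce, ciphertext, tag = seal(enc_key, mac_key, b"attack at dawn")
print(open_sealed(enc_key, mac_key, nonce, ciphertext, tag))
```

The sketch uses separate keys for encryption and authentication, and the receiver never touches the decryption routine when the tag check fails, which is the property that makes this ordering robust.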

Key Management

As we have tried to convey throughout this article, the secure management of cryptographic keys is essential to the security of any cryptosystem. In that context, key management is subject to intense standardization efforts, notably the FIPS 140-2 and 140-3 standards, developed by the National Institute of Standards and Technology (NIST). The standards specify the security of components such as Trusted Platform Modules (TPMs) or Hardware Security Modules (HSMs), used in a variety of secure processes ranging from storing and protecting cryptographic keys, providing secure enclaves to perform cryptographic operations (such as key generation, hashes, etc.), to providing secure hardware to support the entire key lifecycle.

Session keys have a defined lifespan. Encryption keys are created, activated, and used, then they expire, and finally, they are destroyed. Key Management Systems (KMSs) are used to securely manage that lifecycle. At Okta, we provide an enhanced KMS with secure key management options for different cloud platforms, notably Azure and AWS. The Okta KMS abstracts away the key lifecycle management, which allows developers to focus on their applications without the added overhead of managing an entire key hierarchy. In addition, because the Okta KMS is implemented following security best practices, developers do not run the risk of introducing vulnerabilities into their applications by implementing ad-hoc key management systems.

Cryptography Tomorrow and Challenges

Cryptography on the micro scale

New paradigms and computing systems have emerged in recent years with the advent of automated and distributed communication and technologies. Machine-to-Machine (M2M) communication and the Internet of Things (IoT) are now pervasive technologies and have permeated a number of industries, ranging from agriculture to health, transport, and industrial systems. Applications in IoT and M2M are based on communication between devices such as sensors and actuators used to collect data from the environment for use cases such as smart agriculture, smart health, smart cars, and smart industrial systems. These new smart devices present a number of constraints in terms of computing power, memory, and security that must be considered when building cryptographic schemes to secure them. They have indeed been the target of a number of attacks due to their deployment model, often on a large scale as nodes in safety-critical applications. Implementing strong cryptography in devices with strong memory, computing, and security constraints is a challenge that is being tackled by cryptographers. The main goal is to make sure that IoT and M2M devices cannot be compromised or tampered with. New authentication and cryptographic attestation schemes adapted for constrained devices have been developed, and extensive standardization efforts are in progress to regulate this new area of cryptographic application.

Cryptography on the macro scale

Undoubtedly, the future of cryptography is also tied to the advent of quantum computers. Quantum computers are computers that harness phenomena from quantum mechanics, such as superposition and entanglement, to perform operations that classical computers cannot perform efficiently. Small quantum computers have been developed, notably the IBM Q System One, but they are still outperformed by classical computers today.

The realization of large quantum computers, however, will break public-key cryptography as it is today. Indeed, sufficiently powerful quantum computers will efficiently solve computational problems that are believed to be hard for classical computers, such as the factoring problem underlying the security of the RSA cryptosystem. Quantum computers will also have the capability to break many other cryptographic schemes, such as Diffie-Hellman or elliptic-curve cryptography.

Post-quantum cryptography is the cryptography research area tackling cryptographic constructions for a post-quantum world. In 2015, the United States National Security Agency (NSA) called for a transition to quantum-resistant algorithms, and a six-year-long NIST standardization competition followed to develop quantum-resistant cryptographic algorithms (see the NIST announcement "NIST Announces First Four Quantum-Resistant Cryptographic Algorithms").

These new developments in cryptography are paving the way for new constructions, and their implementation is highly delicate and should conform to standards. Otherwise, they could lead to the introduction of vulnerabilities into cryptosystems and expose user data to malicious attackers, or worse, expose encryption keys completely.

Takeaway

In today's context, the protection of our data and digital footprint and the secure implementation of cryptosystems to achieve that goal is a sensitive endeavor. It involves highly technical knowledge of a field that is in constant movement, trying to adapt to computing systems that are moving towards both ends of the spectrum. On one end, computing systems are getting smaller and smarter, with less computing power and memory, while storing more data than ever before. On the other end, we are facing the imminent advent of quantum computers and quantum technology, with incredible computing power that will transform our computing systems as we know them. Cryptography must adapt to this context, providing secure solutions that will stand the test of time. In that regard, it is highly encouraged to use appropriate and safe implementations of cryptosystems, notably by following specifications and research-based recommendations from the cryptography community. Ad-hoc implementations of cryptography by non-experts can introduce vulnerabilities into systems and technologies offered to customers. The goal at Okta is to provide secure, personalized, and adapted systems that stand the test of time.